Can teacher performance pay improve student achievement?

Why is this question important? The available evidence strongly suggests that teachers rank first among the factors influencing student performance (Babu & Mendro, 2003). A central issue facing educators is how to amplify teacher effectiveness. One strategy with enormous potential is a performance compensation package for teachers, variously called performance pay, merit pay, incentive pay, or pay-for-performance. But what does research tell us about the effectiveness of such incentive packages?


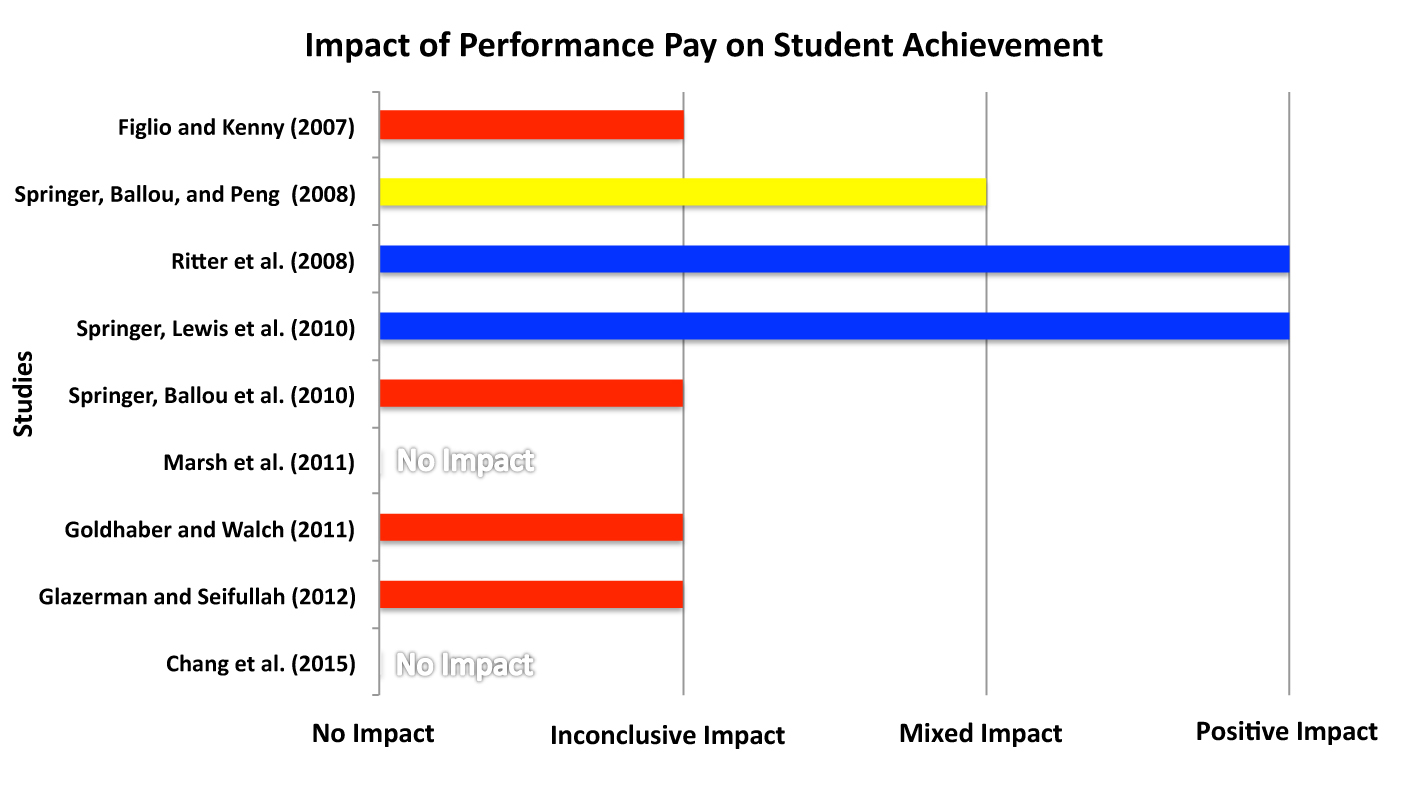

Result(s): Although performance compensation systems have been established in many schools, research offers practitioners a mixed picture of what to expect from this potentially important tool. A literature search conducted by the Wing Institute identified only one meta-analysis on this topic (Condly, Clark, & Stolovitch, 2003). That study found a large effect size of 0.70 for performance compensation in schools, but it did not include student achievement measures, which were required for inclusion in this analysis. To be included in this research summary, a study needed to use quantitative methods to measure student achievement. Nine studies met the criteria for inclusion. Five found either mixed impacts or were inconclusive; of the remaining four, two confirmed positive impacts and two found no positive effects of the intervention.

The overall conclusion is that the impact of teacher performance compensation on student achievement remains unclear. There are currently 3.5 million teachers in the United States. Given the cost of implementing performance compensation at that scale for, at best, small effects, policymakers should remain cautious before investing in the intervention.

Individual Study Results

Figlio and Kenny (2007): This study found that students learned more in schools that implemented performance compensation systems, but its design could not determine whether the effect was due to the incentives themselves or to better schools being more likely to select performance pay as an improvement strategy.

Springer, Ballou, and Peng (2008): This study found positive effects of the Teacher Advancement Program (TAP) on student test gains in grades 2 through 6 but failed to find positive outcomes at the middle and high school levels. The authors concluded that problems with treatment integrity might have contributed to the poor results in middle and high schools.

Ritter et al. (2008): This study examined the effectiveness of the Achievement Challenge Pilot Project (ACPP) in improving student scores on standardized exams and found positive results in multiple subject areas. Students in schools where teachers were eligible for bonuses outperformed students in schools where teachers were not eligible by almost 7 percentile points in mathematics, approximately 9 percentile points in language, and nearly 6 percentile points in reading.

Springer, Ballou, et al. (2010): The Project on Incentives in Teaching (POINT) was a 3-year study conducted in middle schools in Nashville, Tennessee, from 2006–2007 through 2008–2009. The study assessed the effect of performance pay on student academic gains in mathematics compared with a control group's gains. The study found no significant improvement on standardized test scores for students taught by teachers receiving performance compensation.

Springer, Lewis, et al. (2010): This report presented findings from the District Awards for Teacher Excellence (D.A.T.E.) performance compensation program. The study found that student achievement gains were higher in D.A.T.E. schools than in non-D.A.T.E. schools: during D.A.T.E. participation, gains were approximately 0.01 standard deviations higher in reading and approximately 0.03 standard deviations higher in mathematics.

Goldhaber and Walch (2011): This study examined data from Denver Public Schools' Professional Compensation System for Teachers (ProComp) and found student gains across grades and subjects. It reported that a one standard deviation increase in teacher effectiveness corresponded to an increase of 0.09 to 0.18 standard deviations in student achievement. To put this in perspective, research suggests that the difference between a new teacher (0 years of prior experience) and a second-year teacher (1 year of prior experience) is in the range of 0.01 to 0.07 standard deviations of student achievement (Rivkin, Hanushek, & Kain, 2005). However, the results were unclear and often inconsistent across grades and outcomes. It is also important to note that the study's design made it impossible to separate ProComp effects from other unobserved factors that affected student achievement during the ProComp intervention.

Marsh et al. (2011): The purpose of this study was to evaluate the implementation and effects of the New York City Schoolwide Performance Bonus Program (SPBP). This voluntary, 3-year program offered financial incentives to teachers based on school-level performance in high-needs elementary, middle, and high schools. The researchers examined both school and student outcomes to determine whether performance compensation improved teachers' performance. The study found no positive effects on student achievement at any grade level, for either student-level or school-level outcomes.

Glazerman and Seifullah (2012): This study looked at the effects of the Teacher Advancement Program (TAP) in Chicago Public Schools. Chicago TAP is based on a national TAP model developed by the Milken Family Foundation. The report presented findings from the 4-year implementation period, 2007–2008 through 2010–2011. Overall, the program did not raise student achievement scores. The study found both positive and negative impacts on test scores in particular years and schools, but no consistent or significant impact on mathematics, reading, or science scores.

Chang et al. (2015): This study focused on performance-based compensation systems established under a federal Teacher Incentive Fund (TIF) grant awarded in 2010. Performance pay had small positive effects on student reading and mathematics. Students in schools with performance compensation systems scored 0.03 standard deviations higher in reading in the first 2 years than did their peers in control schools. Mathematics scores were also higher in the treatment schools: by 0.02 standard deviations in the first year and 0.04 standard deviations in the second. The authors did not consider these results to be socially or statistically significant.
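Several of the studies above report effects in standard deviation units, while Ritter et al. (2008) report percentile points. One rough way to compare the two is to ask where a student who started at the 50th percentile would land after a given gain. The Python sketch below does this under the simplifying assumption that test scores are normally distributed; the function name `percentile_after_shift` is hypothetical, and the conversion is an illustration, not a reanalysis of any study's data.

```python
import math


def percentile_after_shift(effect_size_sd: float) -> float:
    """Percentile rank of a student who starts at the median (50th percentile)
    after a gain of `effect_size_sd` standard deviations.

    ASSUMPTION: scores are normally distributed, so the new rank is the
    standard normal CDF evaluated at the effect size (computed via math.erf).
    """
    cdf = 0.5 * (1.0 + math.erf(effect_size_sd / math.sqrt(2.0)))
    return 100.0 * cdf


# Student achievement effect sizes (in SD units) quoted in the summaries above.
for label, es in [
    ("D.A.T.E., reading", 0.01),
    ("D.A.T.E., mathematics", 0.03),
    ("TIF, reading", 0.03),
    ("TIF, mathematics, year 2", 0.04),
]:
    rank = percentile_after_shift(es)
    print(f"{label}: {es:.2f} SD -> percentile rank of about {rank:.1f}")
```

Under this assumption, a 0.03 SD gain moves a median student only to about the 51st percentile.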

Implication(s): This examination of performance pay research helps to illustrate issues that policymakers and practitioners commonly face when adopting performance improvement initiatives. One concern is the grouping of significantly different practices under the same name. The practices are analyzed as if they are equivalent interventions when, in fact, they are very different. The resulting meta-analysis or research synthesis may produce false negative conclusions and the premature abandonment of potentially viable interventions, or it may produce false positive results and the adoption of ineffective practices.

This research summary found that differences in performance compensation plan design across the nine studies could explain the inconclusive results. These differences included the following: compensation amounts varied significantly across models; some systems compensated teachers for individual performance while others paid for group performance; payments were dissimilar and often significantly delayed; treatment integrity was frequently cited as a significant obstacle; and different student populations were targeted. All of these factors complicated a collective comparison.

The second concern is the propensity of school systems to adopt packages built from practices that research has shown to be ineffective. Current research offers ample evidence of incentive practices that are successful. It suggests that the packages most likely to produce positive results are those with clear goals and objective performance standards designed to achieve those goals; ongoing and timely feedback on performance relative to the goals and standards; and immediate and powerful behavioral consequences (Alvero, Bucklin, & Austin, 2001). Unfortunately, the Wing Institute found that most of the packages included in this analysis substituted ineffective components for empirically supported practices that would have increased their effectiveness.

In summary, additional research on this topic is needed. Such research should examine the impact of performance compensation packages that are built from similar practices, selected from the best available evidence on what works, and implemented with high treatment integrity across similar populations and grade levels.

Study Description:

Alvero, Bucklin, and Austin (2001): This study is a literature review of 43 studies examining the use of feedback to improve on-the-job performance. The review found that, to be effective, feedback should be used in conjunction with goals, standards, timely coaching, and consequences.

Figlio and Kenny (2007): This statistical analysis combined National Education Longitudinal Study (NELS) data on schools, students, and their families from 2,000 schools with data from the Schools and Staffing Survey (SASS) and the authors' own survey of more than 1,300 of the schools included in NELS.

Springer, Ballou, and Peng (2008): This study provided findings from 1,200 schools on the impact of the Teacher Advancement Program (TAP) on student test scores in mathematics. TAP is a comprehensive school reform model to improve teacher instructional effectiveness and to elevate student achievement. The study established the treatment effect using an ordinary least squares (OLS) regression analysis to compare student test scores in mathematics in schools participating in TAP with those in non-TAP schools.

Ritter et al. (2008): This study used a regression analysis model to assess the impact of the ACPP performance pay program for student/teacher cohorts. The researchers compared the scores before and after the implementation of the performance pay program for the school years 2004–2005, 2005–2006, and 2006–2007.

Springer, Ballou, et al. (2010): POINT was a 3-year study that used a value-added hierarchical linear modeling analysis to compare gains of students taught by teachers participating in the pay-for-performance program with those of students taught by teachers not in the program.

Springer, Lewis, et al. (2010): This study used simple frequency tables and descriptive statistics for selected measures, analysis of variance to compare mean responses of teachers, and hierarchical linear modeling. The results did not necessarily imply a direct causal connection between D.A.T.E. participation and student achievement gains because students were not randomly assigned to study and control groups.

Goldhaber and Walch (2011): This study examined data from Denver Public Schools' ProComp for school years 2002–2003 through 2009–2010. In 2010, the program included approximately 80,000 students and 4,500 teachers. The research used value-added hierarchical linear modeling to estimate the relationship between performance compensation and student achievement.

Marsh et al. (2011): This study used both qualitative and quantitative data to evaluate the impact of New York City's incentive program, SPBP. It conducted surveys, site visits, interviews, and case studies. In addition, the study compared the test scores of students in schools participating in SPBP with those of students in schools that were eligible for but not enrolled in the program. A lottery was used to ensure that the schools chosen for inclusion in the study were comparable. Unfortunately, not all schools followed the lottery protocols. Rather than use a simple comparison, the study used an intent-to-treat (ITT) analysis to account for the problems encountered in implementing the lottery (Shadish, Cook, & Campbell, 2002).
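An intent-to-treat (ITT) analysis compares groups as they were assigned by the lottery, regardless of whether each school actually took part, so the comparison preserves the balance that random assignment created. The Python sketch below contrasts an ITT estimate with a naive comparison by actual participation. The `School` record, both function names, and the four data points are hypothetical illustrations, not data or code from Marsh et al. (2011).

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class School:
    assigned: bool      # won the lottery, i.e., was offered the program
    participated: bool  # actually took part (may differ from assignment)
    score: float        # average student test score (hypothetical units)


def itt_estimate(schools: list[School]) -> float:
    """Intent-to-treat: compare mean scores by lottery assignment,
    ignoring whether each school actually participated."""
    offered = [s.score for s in schools if s.assigned]
    not_offered = [s.score for s in schools if not s.assigned]
    return mean(offered) - mean(not_offered)


def as_treated_estimate(schools: list[School]) -> float:
    """Naive comparison by actual participation; vulnerable to
    self-selection when schools deviate from the lottery."""
    took_part = [s.score for s in schools if s.participated]
    did_not = [s.score for s in schools if not s.participated]
    return mean(took_part) - mean(did_not)


# Hypothetical toy data: two schools did not follow the lottery protocol.
schools = [
    School(assigned=True, participated=True, score=52.0),
    School(assigned=True, participated=False, score=48.0),   # offered, but declined
    School(assigned=False, participated=False, score=50.0),
    School(assigned=False, participated=True, score=55.0),   # not offered, joined anyway
]

print("ITT estimate:", itt_estimate(schools))
print("As-treated estimate:", as_treated_estimate(schools))
```

With these made-up numbers the two estimates even differ in sign, which illustrates why a breakdown in lottery protocols makes the choice of analysis matter. The ITT estimate answers "what was the effect of offering the program?" rather than "what was the effect of participating?"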

Glazerman and Seifullah (2012): The Chicago TAP analysis used a hybrid design that combined random assignment (experimental) and quasi-experimental designs. The quasi-experimental component was needed because only a small number of schools, in a limited number of years, qualified for random assignment. The study found that TAP did not consistently raise student achievement as measured by growth in Illinois Standards Achievement Test (ISAT) scores. It found evidence of both positive and negative impacts in selected subjects, years, and cohorts of schools but, most important, no discernible effect on math, reading, or science achievement.

Chang et al. (2015): This study employed an experimental design to assess the impact of performance pay on educator and student outcomes. The 132 high-needs elementary and middle schools included in the study were randomly assigned to a treatment or a control group. The pertinent student outcomes for this data mining analysis were student test scores in mathematics and reading. The report found that full implementation of the performance pay system was a challenge, and after 2 years the majority of schools reported problems in sustaining the initiative.

Definition(s):

False negative: The result of research that indicates a practice is not effective when the practice actually does work.

False positive: The result of research that indicates a practice is effective when the practice actually does not work.

Package: An intervention comprising multiple practice elements.

Practice elements: The core elements or key foundation components that can be independently researched.

Citation:

Alvero, A. M., Bucklin, B. R., & Austin, J. (2001). An objective review of the effectiveness and essential characteristics of performance feedback in organizational settings (1985-1998). Journal of Organizational Behavior Management, 21(1), 3–29.

Babu, S., & Mendro, R. (2003). Teacher accountability: HLM-based teacher effectiveness indices in the investigation of teacher effects on student achievement in a state assessment program. Paper presented at the annual meeting of the American Educational Research Association (AERA), April 2003, Chicago, IL.

Chang, H., Wellington, A., Hallgren, K., Speroni, C., Herrmann, M., Glazerman, S., & Constantine, J. (2015). Evaluation of the teacher incentive fund: Implementation and impact of pay-for-performance after two years (NCEE 2015-4020). Washington, DC: National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, U.S. Department of Education. Retrieved from http://ies.ed.gov/pubsearch/pubsinfo.asp?pubid=NCEE20154020.

Condly, S. J., Clark, R. E., & Stolovitch, H. D. (2003). The effects of incentives on workplace performance: A meta-analytic review of research studies. Performance Improvement Quarterly, 16(3), 46–63.

Figlio, D., & Kenny, L. (2007). Individual teacher incentives and student performance. Journal of Public Economics, 91, 901–914. Retrieved from http://faculty.smu.edu/millimet/classes/eco7321/papers/figlio%20kenny.pdf.

Glazerman, S., & Seifullah, A. (2012). An evaluation of the Chicago Teacher Advancement Program (Chicago TAP) after four years. Washington, DC: Mathematica Policy Research. Retrieved from http://www.mathematica-mpr.com/publications/PDFs/education/TAP_year4_impacts.pdf.

Goldhaber, D., & Walch, J. (2011). Strategic pay reform: A student outcomes–based evaluation of Denver's ProComp teacher pay initiative. (Working Paper 2011-3.0.) Seattle, WA: Center for Education Data & Research. Retrieved from http://www.cedr.us/papers/working/CEDR%20WP%202011-3_Procomp%20Strategic%20Compensation%20(9-28).pdf.

Marsh, J. A., Springer, M. G., McCaffrey, D. F., Yuan, K., Epstein, S., Koppich, J.,…Peng, X. (2011). A big apple for educators: New York City's experiment with schoolwide performance bonuses: Final evaluation report. Santa Monica, CA: RAND Corporation. Retrieved from http://www.rand.org/content/dam/rand/pubs/monographs/2011/RAND_MG1114.pdf.

National Center for Education Statistics. (1993). Schools and Staffing Survey (SASS). Retrieved from http://nces.ed.gov/surveys/sass/dataproducts.asp.

Pennsylvania Clearinghouse for Education Research (PACER). (2012). Issue brief: Performance pay for teachers. Philadelphia, PA: Research for Action. Retrieved from http://www.researchforaction.org/publication-listing/?id=1179.

Ritter, G., Holley, M., Jensen, N., Riffel, B., Winters, M., Barnett, J., & Greene, J. (2008). Year two evaluation of the Achievement Challenge Pilot Project in the Little Rock Public School District. Fayetteville, AR: Department of Education Reform, University of Arkansas. Retrieved from http://www.researchgate.net/profile/Marcus_Winters/publication/237213205_Year_Two_Evaluation_of_the_Achievement_Challenge_Pilot_Project_in_the_Little_Rock_Public_School_District/links/54eb1c5a0cf25ba91c85c0e8.pdf.

Rivkin, S. G., Hanushek, E. A., & Kain, J. F. (2005). Teachers, schools, and academic achievement. Econometrica, 73(2), 417–458.

Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Boston, MA: Houghton Mifflin.

Springer, M. G., Ballou, D., Hamilton, L., Le, V., Lockwood, J. R., McCaffrey, D. F.,…Stecher, B. M. (2010). Teacher pay for performance: Experimental evidence from the Project on Incentives in Teaching. Nashville, TN: National Center on Performance Incentives at Vanderbilt University. Retrieved from http://www.performanceincentives.org/data/files/pages/POINT%20REPORT_9.21.10.pdf.

Springer, M. G., Ballou, D., & Peng, X. (2008). Impact of the Teacher Advancement Program on student test score gains: Findings from an independent appraisal. Nashville, TN: National Center on Performance Incentives at Vanderbilt University.

Springer, M. G., Lewis, J. L., Ehlert, M. W., Podgursky, M. J., Crader, G. D., Taylor, L. L.,…Stuit, D. A. (2010). District Awards for Teacher Excellence (D.A.T.E.) program: Final evaluation report. Nashville, TN: National Center on Performance Incentives at Vanderbilt University. Retrieved from https://my.vanderbilt.edu/performanceincentives/files/2012/10/FINAL_DATE_REPORT_FOR_NCPI_SITE4.pdf.

Springer, M. G., Podgursky, M. J., Lewis, J. L., Ehlert, M. W., Ghosh-Dastidar, B., Gronberg, T. J., Taylor, L. L. (2008). Texas Educator Excellence Grant (TEEG) program: Year one evaluation report. Nashville, TN: National Center on Performance Incentives at Vanderbilt University. Retrieved from http://www.performanceincentives.org/data/files/news/BooksNews/200802_SpringerEtAl_TEEG_Year1.pdf.
