Understanding Research

What you will find in this section

"Understanding Research" is a resource to assist in more effectively interpreting the complicated and often confusing field of education research. Without regularly reading research, anyone might find it challenging to understand what a particular study offers policy makers, practitioners, or parents in guiding useful decision making. How does a person decide what works, what doesn’t work, when it’s working, and what alternative choices there are if it isn’t working?

"Understanding Research" describes:

1. Various research techniques:

- The most common methods of research available in the field of education.

- The strengths and weaknesses of the different methods.

2. How to establish the quality of research:

- What to look for in a study when you want answers about cause and effect; that is, does this practice produce predictable outcomes?

- The importance of replicating research results through multiple studies produced by different researchers.

3. How to interpret the results: The importance of two valuable research tools.

- Effect size

- Meta-analysis

What is Research?

Research is the process of collecting and analyzing information to increase our understanding of phenomena. This process of inquiry can be as simple as investigating through observation or as complex as experimenting to establish a cause-effect relationship for the events we investigate. Information derived from systematically accumulated observations and investigations can be organized into theories and laws about how the world operates. In taking research to practice, we translate these theories and laws into programs and treatments. We do this so that we can reliably achieve desired results when we consistently implement programs and treatments. Research enables policy makers and practitioners to make smart decisions. For example, when testing a new reading program, researchers have a concept of how they want it to look. They also have a picture of how they want the program to affect a student’s reading ability and how they plan to measure this effect. By conducting research, we increase the likelihood that our reading program will reliably produce competent readers over time.

What is achieved through research:

1. Replicable results.

2. Results that best match educators’ expectations for quality.

3. Results that reduce the risk of students falling behind expectations. (Each year that students fall behind, the harder it is for them, and the less likely they are, to catch up.)

4. Identification of flaws and deficits in current practices.

5. Increased likelihood of success for students in future grades.

Various research techniques

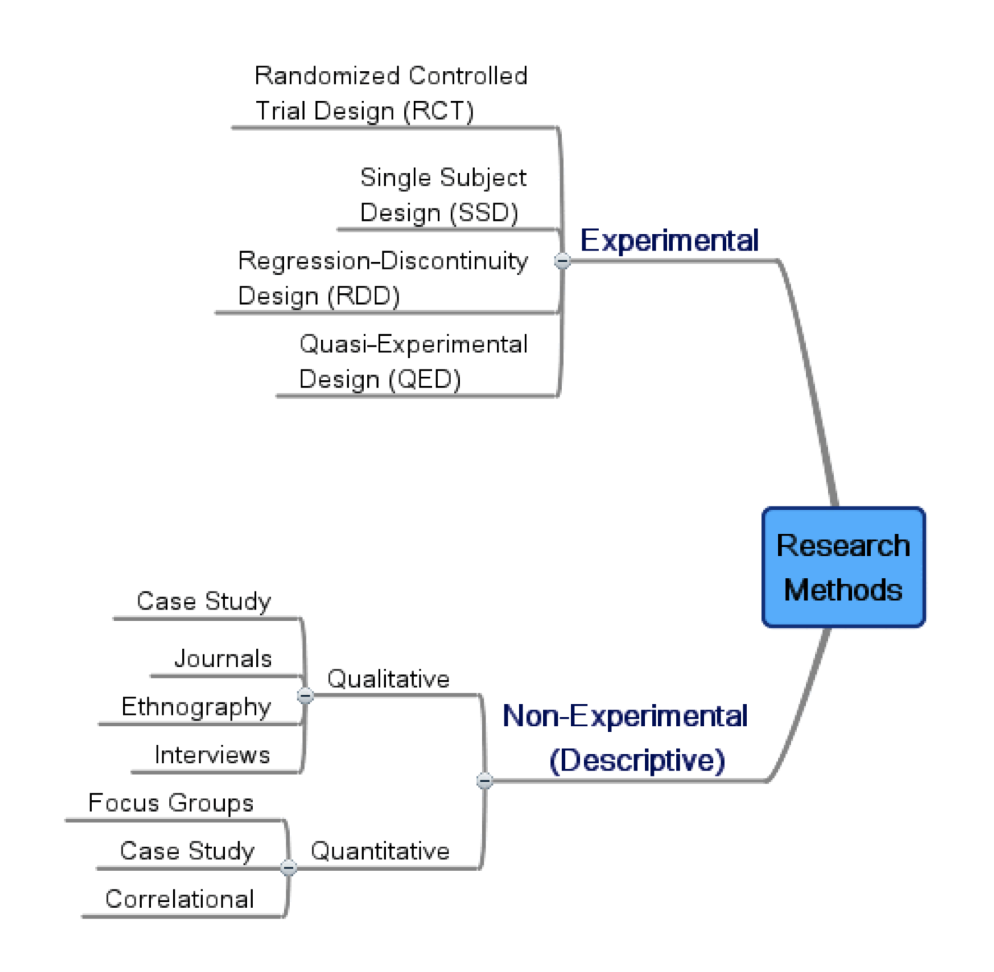

Qualitative and Quantitative Research

Research studies are categorized as qualitative or quantitative.

Qualitative research is an umbrella term referring to descriptive methods that produce narratives drawn from observations, interviews, and document analysis.

Quantitative methods rely on collecting and analyzing numerical data derived from research for the purpose of describing and explaining phenomena.

Non-Experimental and Experimental Research

Research is generally classified into one of two categories: non-experimental and experimental inquiries.

1. Non-Experimental Research

This is the most common form of research. A non-experimental design describes observed events without influencing the experience. This is a strong type of research when the intent is not to manipulate variables but merely to describe phenomena. These designs are especially important when little is known about a phenomenon and the researcher needs to collect sufficient information before proposing experimental studies. Non-experimental designs are weakest with respect to internal validity, the ability to assess the cause-effect relationships between an intervention and its outcomes.

Simple and Comparative Descriptive Designs

These designs are used simply to describe what is observed or to compare different observations.

For example, a researcher administers a survey to a sample of first-year teachers in order to describe the characteristics of novice teachers. Simple descriptive studies include ethnographies, journals, qualitative case studies, and interviews.

Correlational Research Design

This research, a form of quantitative descriptive research, receives much attention in the media. It is used to describe the statistical association between two or more variables. Because the method relies on quantitative data, conclusions are easily summarized for presentation to the public. It is important to note that correlational studies have low internal validity and cannot establish a cause-effect relationship between the variables. Correlational research is valuable in guiding researchers as to where more rigorous methods of research should be employed.
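
As a rough illustration of what "statistical association" means in practice, the sketch below computes a Pearson correlation coefficient, one common measure of association. The function name `pearson_r` and the reading-minutes and test-score figures are hypothetical and are not drawn from any study.

```python
import math


def pearson_r(x, y):
    """Return the Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of the products of paired deviations from each mean.
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Square roots of the summed squared deviations for each variable.
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return covariation / (spread_x * spread_y)


# Hypothetical data: daily minutes of reading and a reading test score.
minutes_reading = [10, 20, 30, 40, 50]
test_scores = [55, 60, 70, 72, 85]

print(round(pearson_r(minutes_reading, test_scores), 2))
```

A coefficient near +1 or -1 signals a strong association, but it says nothing about whether one variable causes the other, which is why correlational findings are best treated as pointers toward more rigorous study.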

2. Experimental Research

Randomized Controlled Trial (RCT)

RCT is an experimental method in which investigators randomly assign eligible subjects into groups to receive or not receive one or more interventions that are being compared. It is considered the gold standard for establishing internal validity when the goal is to assess the cause-effect relationships between an intervention and its outcomes. RCT relies on statistical methods to ascertain the external validity of the intervention; that is, how well the results can be generalized from the research setting and participants to other populations and conditions.

Single-Subject Design (SSD)

This is an experimental method very effective in establishing internal validity with a single individual or a small group of individuals. SSD is limited in its ability to make statements of external validity.

Regression-Discontinuity Design (RDD)

This experimental method is a pretest-posttest comparison group design. The unique characteristic that separates this method from a randomized controlled trial is how participants are assigned to the comparison groups. RDD assigns participants to comparison groups on the basis of a cutoff score on a pre-intervention measure, whereas an RCT randomly assigns participants to groups.
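
The difference between the two assignment rules can be sketched in a few lines of Python. This is only an illustration: the function names, the student names, the pretest scores, and the choice to give the intervention to students scoring below the cutoff are all assumptions made for the example.

```python
import random


def assign_randomly(participants, seed=None):
    """RCT-style assignment: shuffle the participants and split them in half."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def assign_by_cutoff(pretest_scores, cutoff):
    """RDD-style assignment: group membership depends only on the pretest score.

    Assumption for this sketch: students scoring below the cutoff
    receive the intervention.
    """
    intervention = [name for name, score in pretest_scores.items() if score < cutoff]
    comparison = [name for name, score in pretest_scores.items() if score >= cutoff]
    return intervention, comparison


# Hypothetical pretest scores for six students.
pretest = {"Ana": 35, "Ben": 50, "Cara": 62, "Dev": 40, "Eli": 71, "Fay": 44}

print(assign_randomly(pretest.keys(), seed=1))
print(assign_by_cutoff(pretest, cutoff=45))
```

With random assignment, chance alone decides who lands in each group. With a cutoff, the rule is fully known in advance, which is what allows an RDD analysis to account for it.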

Quasi-Experimental Design (QED)

As an experimental design, QED is less rigorous than RCT or RDD because participants are not assigned to groups at random or by a cutoff score. This lack of rigorous assignment introduces the potential for selection bias, which weakens the ability to make strong cause-effect claims.

How to establish the quality of research

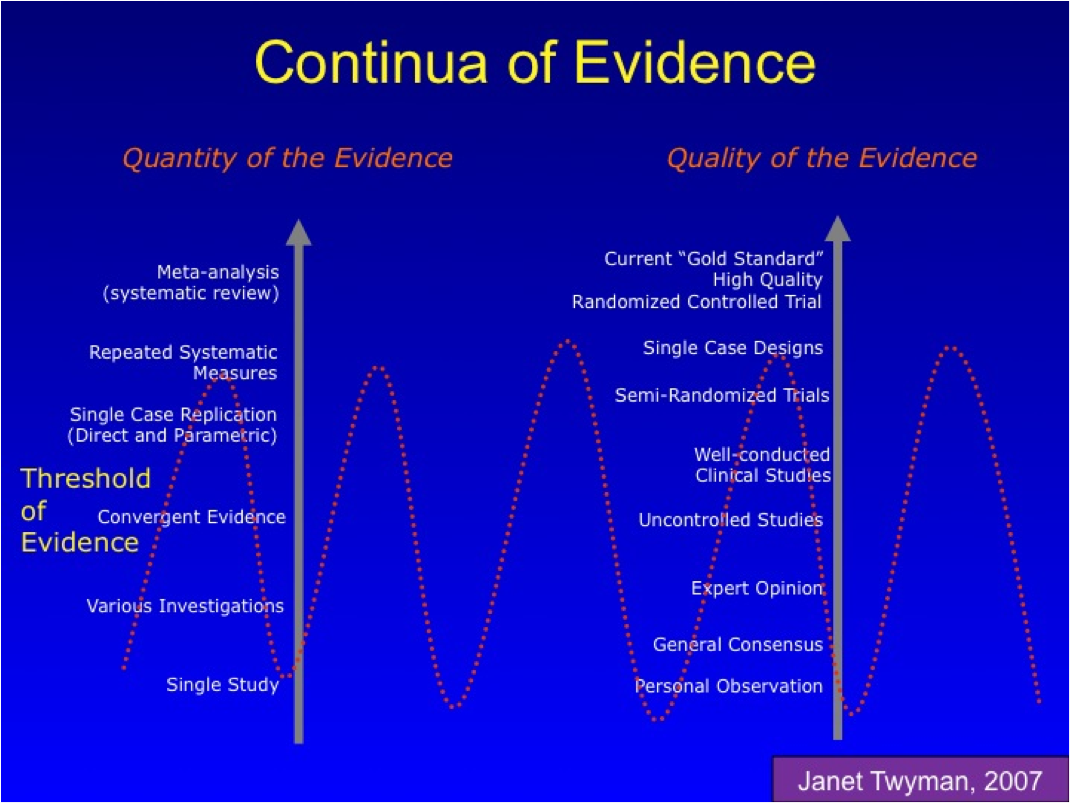

In an evidence-based culture, we interpret the results of research on continua of evidence to determine whether the conclusions exceed the threshold we require for making smart decisions. The continua cover two qualities: the quantity of the evidence and the quality of the evidence.

Quality of Evidence

A cornerstone of an evidence-based culture is quality of evidence, or the confidence educators have in the conclusions derived from the study. In looking at the quality of a study, we are determining the strength of its evidence as measured by internal validity. As educators, we want to know how likely we are to achieve similar results when we implement the practice. This confidence increases along the quality continuum as internal validity increases, beginning with non-experimental methods, such as those that rely on personal observation, and culminating in the gold standard of designs, the randomized controlled trial.

Quantity of Evidence

Another cornerstone is quantity of evidence; that is, having lots of studies that examine the same phenomenon. One study does not offer conclusive evidence of the effectiveness of a practice. Scientists gain confidence in their knowledge by replicating findings through multiple studies, conducted by independent researchers, over time to show that results were not a fluke or due to an error.

How to interpret results

One of the great challenges for educators in using knowledge derived from research is how to wade through the volume of studies available as well as the information within a study. Fortunately, over the past 30 years two valuable tools have been developed to help educators understand the available research: effect size and meta-analysis.

Effect Size

Rather than a research design or a method to establish cause-effect relationships, effect size is used to understand the results derived from research. It is a standardized measure to assess the magnitude of an intervention’s effect, which helps determine how powerful the impact of an intervention is.

Effect sizes can be negative or positive. A small effect is commonly defined as d = 0.2, a medium effect as d = 0.5, and a large effect as d = 0.8, but it is not uncommon to see effect sizes that exceed 1.0. The terms "small," "medium," and "large" are relative. Researchers accept the risk of using relative terms in the belief that they have more to gain than lose by offering a common, conventional frame of reference when no better way to estimate the impact of a practice or intervention is available.

| Cohen’s d * | Effect Size |
| --- | --- |
| Small | d = 0.2 |
| Medium | d = 0.5 |
| Large | d = 0.8 |

* The accepted benchmark for effect size comes from Jacob Cohen (1988), a U.S. statistician and psychologist.
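
For readers who want to see how such a number arises, here is a minimal Python sketch. It uses one common formulation of Cohen's d: the difference between the two group means divided by their pooled standard deviation. The function names `cohens_d` and `describe_effect` and the test scores are hypothetical, and the labels simply apply the conventional benchmarks listed above.

```python
import math
from statistics import mean, variance


def cohens_d(treatment, control):
    """Difference between group means, in units of the pooled standard deviation."""
    n1, n2 = len(treatment), len(control)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_variance = (
        (n1 - 1) * variance(treatment) + (n2 - 1) * variance(control)
    ) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / math.sqrt(pooled_variance)


def describe_effect(d):
    """Apply the conventional benchmarks to the size of d, ignoring its sign."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "below the small benchmark"


# Hypothetical reading scores for students with and without a new program.
with_program = [78, 85, 90, 72, 88]
without_program = [70, 75, 80, 68, 77]

d = cohens_d(with_program, without_program)
print(round(d, 2), describe_effect(d))
```

Swapping the two groups flips the sign of d, which is how an effect size comes out negative when the comparison group outperforms the intervention group.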

Meta-Analysis

A meta-analysis is a literature review that integrates individual studies on a single topic, using statistical techniques to combine their results into an overall effect size. This is extremely helpful to educators in making sense of multiple studies on a single topic.

Meta-analysis is not itself a research design or an actual scientific study. Its purpose is only to act as a method to statistically examine scientific studies.
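
One simple way to combine results is sketched below. It assumes a fixed-effect model with inverse-variance weights, which is only one of several approaches (published meta-analyses often use random-effects models instead). The function name `pooled_effect_size` and the study figures are hypothetical.

```python
import math


def pooled_effect_size(effect_sizes, variances):
    """Combine study effect sizes, giving more precise studies more weight.

    Each study is weighted by the inverse of its variance
    (fixed-effect model, assumed here for simplicity).
    """
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    estimate = sum(w * d for w, d in zip(weights, effect_sizes)) / total_weight
    standard_error = math.sqrt(1.0 / total_weight)
    return estimate, standard_error


# Hypothetical effect sizes and variances from three studies of one practice.
study_effects = [0.30, 0.55, 0.42]
study_variances = [0.04, 0.09, 0.02]

overall, se = pooled_effect_size(study_effects, study_variances)
print(round(overall, 2), round(se, 2))
```

Because larger, more precise studies have smaller variances, they pull the overall estimate toward their own results more strongly than small studies do.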