Latest News

December 16, 2019

On the Reality of Dyslexia. This paper assesses research on the topic of dyslexia. Willingham’s piece responds to comments by literacy researcher Dick Allington that question the legitimacy of the label “dyslexia.” Answering this question is more than an academic exercise: a clearer understanding of dyslexia is crucial if educators are to understand why 10% of students struggle to master reading, the skill essential to success in academic learning. Willingham highlights the etiology of the disorder and concludes that the ability to read is the product of the home environment, instruction at school, and the child’s genetics. Dyslexia is a difficulty in mastering the skills of reading and is closely related to fluency in language. Dyslexia is not like measles, where you are either ill or you aren’t. It is more like high blood pressure, where individuals fall along a bell curve. This continuous distribution is consistent with the hypothesis that the disorder arises from a complex interaction among multiple causes. Although it does not have a single source, dyslexia can be successfully remediated through evidence-based language and reading instruction.

Citation: Willingham, D. (2019). On the Reality of Dyslexia. Charlottesville, VA. http://www.danielwillingham.com/daniel-willingham-science-and-education-blog/on-the-reality-of-dyslexia?utm_source=feedburner&utm_medium=email&utm_campaign=Feed%3A+nbspDanielWillingham-DanielWillinghamScienceAndEducationBlog+%28Daniel+Willingham%27s+Science+and+Education+Blog%29.

Link: On the Reality of Dyslexia

December 16, 2019

PISA 2018 Results (Volume I): What Students Know and Can Do. Benchmark indicators are critical tools that help education stakeholders track their education system’s performance over time, in comparison to similar education systems (state, national, international), and by student group (ethnicity, disability, socioeconomic status, etc.). One of the most respected tools for benchmarking system performance is the Program for International Student Assessment (PISA), which tests 15-year-old students across nearly 80 countries and educational systems in reading, mathematics, and science. The results from the most recent testing (2018) were just released. The report contains an enormous amount of data. A summary of key findings follows.

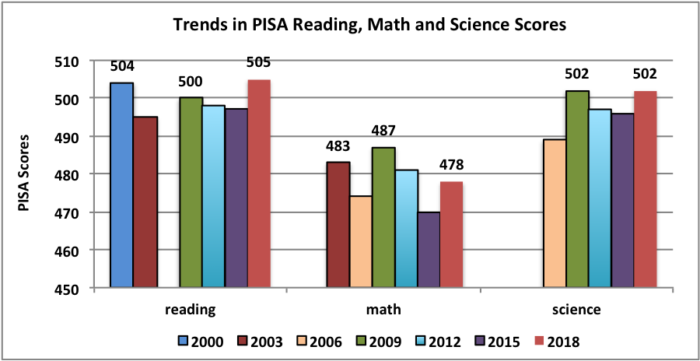

Performance Over Time

U.S. test performance, despite small fluctuations, has been virtually flat over the past twelve to eighteen years (depending on the subject area). The reading score was 504 in 2000 and 505 in 2018. Math performance declined, from 483 in 2003 to 478 in 2018. Science performance has held at 502 across the last four testing periods, from 2009 to 2018. Consistency is not inherently bad; it depends on how well a system is performing. However, PISA data suggest that the U.S. system is significantly underperforming compared to other international systems (see below). Consistency is also a problem when one considers that unprecedented investments in school reform during this period (e.g., No Child Left Behind, School Improvement Grants, Race to the Top, and the Every Student Succeeds Act) have failed to move the needle in any significant way.

Performance Compared to Other Countries

U.S. performance ranked thirteenth among participating nations in reading, thirty-eighth in math, and twelfth in science. These rankings are a slight improvement over the 2015 test results, but the change reflects lower scores in several top-performing nations, not improvement by the U.S.

Performance Across Different Student Subgroups

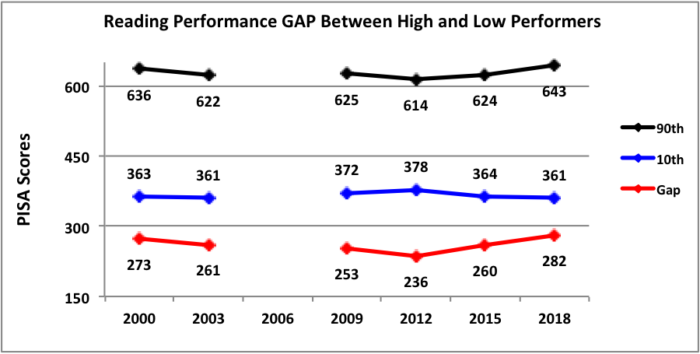

One of the biggest takeaways from this report is the growing inequity in performance between the highest- and lowest-performing students. The following chart shows the gap between the scores of students at the top of the achievement distribution (the 90th percentile) and those at the bottom (the 10th percentile).

Reading scores of the highest-performing students have increased over the last two tests from 614 to 643, while the scores of the lowest 10% have decreased from 378 to 361. The result is a widening gap between the highest- and lowest-performing students. While improving the scores of the best-performing students is a laudable achievement, an education system must serve all of its students in the interest of equity.
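The size of the widening gap can be checked directly from the four reading scores quoted above. The short Python sketch below uses only those figures; the variable and function names are illustrative and do not come from the OECD report.

```python
# Reading scores quoted in the summary above for the last two tests.
# "earlier" and "later" are illustrative labels, not OECD terminology.
top_scores = {"earlier": 614, "later": 643}     # highest-performing students
bottom_scores = {"earlier": 378, "later": 361}  # lowest-performing 10%


def score_gap(top: int, bottom: int) -> int:
    """Return the point gap between top and bottom performers."""
    return top - bottom


gap_earlier = score_gap(top_scores["earlier"], bottom_scores["earlier"])
gap_later = score_gap(top_scores["later"], bottom_scores["later"])
gap_change = gap_later - gap_earlier

print(f"Earlier gap: {gap_earlier} points")
print(f"Later gap: {gap_later} points")
print(f"Change in gap: {gap_change} points")
```

On these figures the gap grows from 236 to 282 points, a widening of 46 points. Of that, 29 points come from gains at the top and 17 from losses at the bottom.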

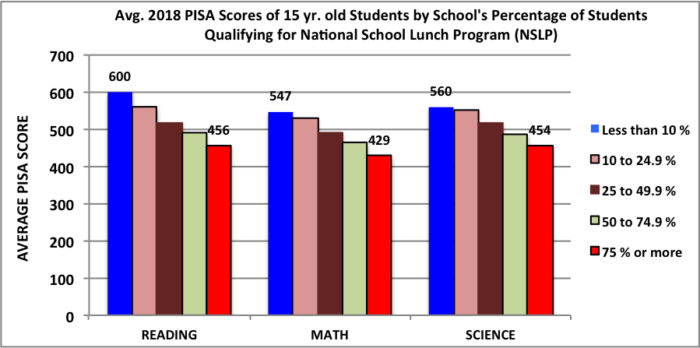

Further analysis of the data shows a correlation between student performance differences and their socioeconomic status (SES). One of the metrics used to determine SES is whether or not students qualify for the National School Lunch Program.

This data shows a direct correlation between a school’s scores and the SES of its student body. The more low-SES students a school enrolls, the lower its PISA reading, math, and science scores.

It is almost impossible to document cause and effect with data at this level of analysis and control. Still, when making policy and program decisions, we must use the best available evidence. In this case, the best available evidence portrays an education system that, despite significant school improvement efforts, has shown little or no improvement over time, performs worse than a significant number of other nations’ education systems, and continues to produce inequitable results.

Citation. OECD (2019), PISA 2018 Results (Volume I): What Students Know and Can Do, PISA, OECD Publishing, Paris, https://doi.org/10.1787/5f07c754-en.

Web Address. https://www.oecd.org/pisa/publications/pisa-2018-results-volume-i-5f07c754-en.htm

December 16, 2019

Examining racial/ethnic disparities in school discipline in the context of student-reported behavior infractions. Research strongly supports the existence of bias in human beings. Discrepancies between how teachers handle behavior management incidents for students of color and for white students have been a concern of education researchers for well over a decade. This paper looks at the disproportionality of consequences for disciplinary infractions between these groups of students. The researchers were interested in determining whether students of color and white students receive suspensions, office referrals, personal warnings from a teacher, and warnings about their behavior sent home at similar rates. Wegmann and Smith’s study finds that African American students are less likely than their white peers to receive warnings for behavior infractions, resulting in escalating consequences for students of color. The unequal handling of disciplinary actions reflects a pressing need for schools to address implicit and explicit bias as a means of addressing this central issue in education.

Citation: Wegmann, K. M., & Smith, B. (2019). Examining racial/ethnic disparities in school discipline in the context of student-reported behavior infractions. Children and Youth Services Review, 103, 18-27.

Link: https://www.sciencedirect.com/science/article/pii/S0190740918311095

December 5, 2019

The Effect of Principal Behaviors on Student, Teacher, and School Outcomes: A Systematic Review and Meta-Analysis of the Empirical Literature. This meta-analysis finds a positive relationship between student achievement and the time school principals spend on five commonly assigned roles. These principal responsibilities are instructional management, internal relations, organizational management, administration, and external relations. The study finds that a principal cannot focus on a select few of the categories but must carve out adequate time for each role. The need to be proficient across all leadership categories offers little comfort to U.S. principals, who report average workweeks of 58.6 hours (U.S. Department of Education National Center for Education Statistics, 2017). The paper recommends that school principals be provided with additional resources if they are to adequately meet the needs of students, teachers, and the community. Although these roles differ from the responsibilities researched by Viviane Robinson and colleagues (goal expectations, strategic resourcing, teaching and curriculum, teacher development, and supportive environment; Robinson, Lloyd, & Rowe, 2008), there does appear to be significant overlap with those identified in the Liebowitz and Porter meta-analysis.

Citation: Liebowitz, D. D., & Porter, L. (2019). The Effect of Principal Behaviors on Student, Teacher, and School Outcomes: A Systematic Review and Meta-Analysis of the Empirical Literature. Review of Educational Research, 89(5), 785-827.

Link: https://journals.sagepub.com/doi/abs/10.3102/0034654319866133

December 5, 2019

The Effect of Linguistic Comprehension Training on Language and Reading Comprehension. Children who begin school with proficient language skills are more likely to develop adequate reading comprehension abilities and achieve academic success than children who struggle with poor language skills in their early years. Individual language difficulties, environmental factors related to socioeconomic status, and having the language of instruction as a second language are all considered risk factors for language and literacy failure. This review considers whether language-supportive programs are effective. The research aims to examine the immediate and long-run effects of such programs on generalized measures of linguistic comprehension and reading comprehension. Examples of linguistic comprehension skills include vocabulary, grammar, and narrative skills.

The effect of linguistic comprehension instruction on generalized outcomes of linguistic comprehension skills is small, both immediately after instruction and at follow-up. Analysis of differential language outcomes shows small effects on vocabulary and grammatical knowledge and moderate effects on narrative and listening comprehension. Linguistic comprehension instruction has no immediate effect on generalized outcomes of reading comprehension. Only a few studies have reported follow-up effects on reading comprehension skills, and their findings diverge.

Citation: Rogde, K., Hagen, Å. M., Melby-Lervåg, M., & Lervåg, A. (2019). The Effect of Linguistic Comprehension Training on Language and Reading Comprehension: A Systematic Review. Campbell Systematic Reviews.

Link: https://www.campbellcollaboration.org/better-evidence/linguistic-training-effect-on-language-and-reading-comprehension.html

November 15, 2019

A Powerful Hunger for Evidence-Proven Technology. The technology industry and education policymakers have touted the benefits of computers in learning. As a consequence, schools in the United States now spend more than $2 billion each year on education technology. But what are schools getting in return for this significant investment? Robert Slavin examines the results from five reviews designed to answer this question. Slavin concludes that the impact of technology-infused instruction on reading, mathematics, and science in elementary and secondary schools is very small. His analysis finds a study-weighted average effect size of +0.05 across these five reviews. This effect size appears to be an insignificant return on investment for such a substantial allocation of resources. Slavin concludes that how software is designed is at the heart of the problem. Commercial companies most often develop education technology. Given that technology companies are market-driven, education software developers value profit margins, attractiveness, ease of use, low cost, trends, and fads over evidence of efficacy. Slavin proposes that any solution must include stronger incentives for technology developers to create products based on rigorous research and proven technology-based programs. Regardless of how the problem is best solved, educators need to take this call seriously.

Citation: Slavin, R. (2019). A Powerful Hunger for Evidence-Proven Technology. Baltimore, MD: Robert Slavin’s Blog. https://robertslavinsblog.wordpress.com/2019/11/14/a-powerful-hunger-for-evidence-proven-technology/.

Link: https://robertslavinsblog.wordpress.com/2019/11/14/a-powerful-hunger-for-evidence-proven-technology/

November 14, 2019

Using Resource and Cost Considerations to Support Educational Evaluation: Six Domains. Assessing the cost of an initiative along with its effectiveness is common in public policy decision-making but is frequently missing in education decision-making. Understanding the cost-effectiveness of an intervention is essential if educators are to maximize its impact given limited budgets. Education is full of examples of practices, such as class-size reduction and accountability through high-stakes testing, that produce minimal results while consuming significant resources. It is vital for those making critical decisions to understand which practice best meets the needs of the school and its students and can be implemented with the available resources. The best way to do this is through a cost-effectiveness analysis (CEA).

A CEA requires an accurate estimate of all added resources needed to implement the new intervention. Costs commonly associated with education interventions include added personnel, professional development, classroom space, technology, and expenses to monitor effectiveness. The second variable essential to a CEA is the selection of a practice supported by research. In the past twenty years, the quality and quantity of research supporting different education practices have increased significantly. A CEA weighs the extra expenditures required to implement a new intervention, relative to current practice, against the gains in targeted education outcomes. Examples of educational outcomes are standardized test scores, graduation rates, and student grades.
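One common way to express the comparison described above is a cost-effectiveness ratio: the added cost of an intervention divided by its added effect on the targeted outcome. The sketch below is a minimal illustration under that assumption, not the authors’ method. The `Intervention` class, the option names, and every dollar figure and effect size are hypothetical values invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Intervention:
    name: str
    added_cost_per_student: float  # hypothetical extra dollars beyond current practice
    effect_size: float  # hypothetical gain on the targeted outcome, in standard deviations


def cost_per_unit_effect(intervention: Intervention) -> float:
    """Added cost divided by added effect; a lower value is more cost-effective."""
    if intervention.effect_size <= 0:
        raise ValueError("the ratio is only meaningful for a positive effect")
    return intervention.added_cost_per_student / intervention.effect_size


# Hypothetical options, invented purely for illustration.
options = [
    Intervention("Option B", added_cost_per_student=1200.0, effect_size=0.5),
    Intervention("Option A", added_cost_per_student=500.0, effect_size=0.25),
]

# Rank from most to least cost-effective.
ranked = sorted(options, key=cost_per_unit_effect)
for option in ranked:
    ratio = cost_per_unit_effect(option)
    print(f"{option.name}: ${ratio:,.0f} per standard deviation gained")
```

With these made-up numbers, Option A costs less per unit of effect even though Option B has the larger effect, which is the kind of trade-off a CEA is meant to surface.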

The focus of this essay is on which economic methods can complement and enhance impact evaluations. The authors propose the use of six domains to link intervention effectiveness to the best technique needed to determine which practice is the most cost-effective choice. The six domains outlined in the paper are outcomes, treatment comparisons, treatment integrity, the role of mediators, test power, and meta-analysis. This paper provides examples of how analyzing the costs associated with these domains can complement and augment practices in evaluating research in the field of education.

Citation: Belfield, C. R., & Brooks Bowden, A. (2019). Using Resource and Cost Considerations to Support Educational Evaluation: Six Domains. Educational Researcher, 48(2), 120-127.

Link: https://edre.uark.edu/_resources/pdf/er2018.pdf

November 12, 2019

A systematic review of single-case research on video analysis as professional development for special educators. Professional development is viewed as essential to providing teachers with the skills needed to be successful in the classroom. Research strongly supports the need to go beyond the typical in-service training commonly provided to teachers. Coaching and feedback have been found to be very effective in increasing the likelihood that training will be implemented in classrooms. Video has been offered as a cost-effective way for trainers to give teachers in training feedback based on their actual classroom use of the new skill(s).

Citation: Morin, K. L., Ganz, J. B., Vannest, K. J., Haas, A. N., Nagro, S. A., Peltier, C. J., … & Ura, S. K. (2019). A systematic review of single-case research on video analysis as professional development for special educators. The Journal of Special Education, 53(1), 3-14.

Link: https://www.researchgate.net/publication/328538866_A_Systematic_Review_of_Single-Case_Research_on_Video_Analysis_as_Professional_Development_for_Special_Educators

November 8, 2019

Training Teachers to Increase Behavior-Specific Praise: A Meta-Analysis. This research examines the literature supporting teacher training in the use of behavior-specific praise. One of the most common problems confronting classroom teachers is managing student behavior. Praise is a straightforward, cost-effective intervention used to increase appropriate behavior and decrease troublesome student conduct. In the absence of training, teachers fail to use behavior-specific praise adequately and frequently fall back on negative statements to control student conduct. The current knowledge base finds this approach to be counterproductive.

On the other hand, rigorous research indicates that when teachers receive training in the use of praise, disruptive behavior decreases and appropriate conduct increases. This meta-analysis examined 28 single-subject design studies. The authors found a large aggregate effect size: teachers who received training increased their use of behavior-specific praise with students.

Citation: Zoder-Martell, K. A., Floress, M. T., Bernas, R. S., Dufrene, B. A., & Foulks, S. L. (2019). Training Teachers to Increase Behavior-Specific Praise: A Meta-Analysis. Journal of Applied School Psychology, 1-30.

Link: https://eric.ed.gov/?id=EJ1226912

November 7, 2019

What Do Surveys of Program Completers Tell Us About Teacher Preparation Quality? Identifying which teacher preparation programs produce highly qualified teachers is understood to be a means to improve the effectiveness of teacher preparation programs (TTP). One proposed method for measuring TTP effectiveness is surveying recent graduates’ satisfaction with the training they received. Research suggests that satisfaction reported in these surveys correlates with teacher classroom performance, evaluation ratings, and retention data. If correct, this data offers schools a wealth of information to aid in deciding which pre-service programs to focus recruiting efforts on. It also suggests that surveys can provide data for holding TTPs accountable.

But much of the available research lacks sufficient rigor. To raise the validity and reliability of its findings, this paper uses survey data from teachers in the state of North Carolina to gauge graduates’ satisfaction with TTP training. The study concludes that perceptions of preparation programs are modestly associated with the effectiveness and retention of first- and second-year teachers. The researchers find that, on average, those who feel better prepared to teach are more effective and more likely to remain in teaching. These results indicate that surveys of preparation program graduates’ satisfaction should be monitored to assure their validity and reliability, given the interest that accreditation bodies, state agencies, and teacher preparation programs show in using this data for high-stakes decision making. The results also imply that surveys alone do not provide sufficient data to identify which programs offer the best teacher training.

Citation: Bastian, K. C., Sun, M., & Lynn, H. (2018). What do surveys of program completers tell us about teacher preparation quality? Journal of Teacher Education, November 2019.

Link: https://journals.sagepub.com/doi/abs/10.1177/0022487119886294