
Treatment Integrity Strategies

Citation: States, J., Detrich, R. & Keyworth, R. (2017). Treatment Integrity Strategies. Oakland, CA: The Wing Institute. https://www.winginstitute.org/effective-instruction-treatment-integrity-strategies.

Student achievement scores in the United States remain stagnant despite repeated attempts to reform the system. New initiatives promising hope arise, only to disappoint after being adopted, implemented, and quickly found wanting. The cycle of reform followed by failure has had a demoralizing effect on schools, making new reform efforts more problematic. These efforts frequently fail because implementing new practices is far more challenging than expected and requires that greater attention be paid to how initiatives are implemented (Fixsen, Blase, Duda, Naoom, & Van Dyke, 2010).

Inattention to treatment integrity is a primary factor in failure during implementation. Treatment integrity is defined as the extent to which an intervention is executed as designed, and the accuracy and consistency with which the intervention is implemented (Detrich, 2014; McIntyre, Gresham, DiGennaro, & Reed, 2007).

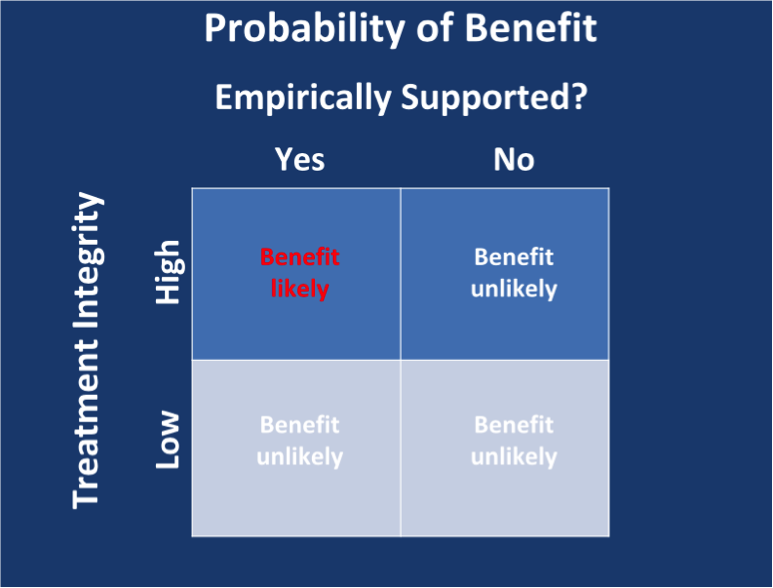

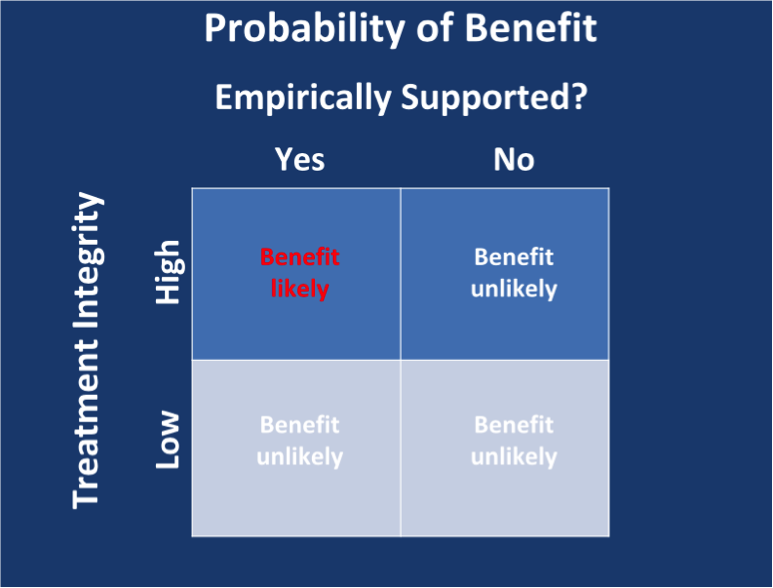

Figure 1: Benefit From Evidence-Based Practices Implemented With Integrity

Figure 1 shows the relationship between implementation of empirically supported interventions and treatment integrity. If an empirically supported intervention is implemented with a high degree of treatment integrity, then there is high probability of benefit to the student. If that same intervention is implemented poorly, then the probability of benefit is low. If an intervention is implemented with high integrity but does not have empirical support, then the probability of benefit is still low because the intervention is ineffective. This is similar to taking placebo pills in a medication study. Even if the placebo is taken exactly as prescribed, it is not likely to produce a medically important benefit. Implementing an unsupported intervention poorly is not likely to produce benefit either, because both empirical support and high integrity are absent.
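The four cases described for Figure 1 can be read as a simple lookup. The sketch below is illustrative only: the function name `probability_of_benefit` and the labels "high" and "low" are assumptions chosen to mirror the figure's wording, not measured quantities from the cited research.

```python
def probability_of_benefit(empirically_supported: bool, high_integrity: bool) -> str:
    """Return the qualitative probability of student benefit, per Figure 1.

    Hypothetical helper: the labels mirror the figure's wording and are
    not numeric estimates.
    """
    # Benefit is likely only when the intervention has empirical support
    # AND it is implemented with high treatment integrity.
    return "high" if (empirically_supported and high_integrity) else "low"


# Walk through all four cells of the figure.
for supported in (True, False):
    for integrity in (True, False):
        label = probability_of_benefit(supported, integrity)
        print(f"supported={supported}, high_integrity={integrity}: {label}")
```

Only one of the four combinations yields a high probability of benefit, which is the point of the figure: empirical support and integrity are both necessary.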

When innovations are not implemented as conceived, it should not be a surprise that anticipated benefits are not forthcoming. This raises the possibility that the issue is with the quality of implementation and not with the practice itself (Fixsen, Naoom, Blase, Friedman, & Wallace, 2005). Research suggests that practices are rarely implemented as designed (Hallfors & Godette, 2002). Despite solid evidence of a relationship between effectiveness of a practice and treatment integrity, it has only been in the past 20 years that systematic efforts have focused on how practitioners can influence the quality of treatment integrity (Durlak & DuPre, 2008; Fixsen et al., 2010). Measuring treatment integrity in schools continues to be rare, but it is the necessary place to begin efforts to improve treatment integrity. The question remains, what strategies can educators employ to increase the likelihood that practices will be implemented as designed?

Two Types of Treatment Integrity Strategies

Strategies designed to increase treatment integrity fall into two main categories: antecedent-based strategies and consequence-based strategies.

Antecedent-based strategies involve any setting event or environmental factor that occurs before the new practice is implemented and that is intended to increase treatment integrity. These strategies also include actions designed to eliminate or reduce the impact of setting events or environmental considerations that impede treatment integrity. An example of an antecedent-based strategy that may increase the chances of the new practice being implemented as designed is staff development. An example of an antecedent-based strategy used to decrease undesirable events or environmental conditions is action taken to mitigate staff opposition to the new practice.

Consequence-based strategies, on the other hand, follow the implementation of a new practice and are designed to increase or maintain high treatment integrity in the future. These strategies reinforce the implementation of the elements of a new practice, thus improving the chance the practice will produce future desired outcomes. Consequence-based strategies may also be used to eliminate or reduce the impact of events and environmental factors that may interfere with the successful execution of the new practice in the future. An example of a consequence-based strategy that may increase future integrity is positive feedback for faithfully implementing the new practice elements. An example of a consequence-based strategy to eliminate or reduce factors that interfere with the successful execution of the new practice is corrective feedback coupled with coaching.

Antecedent-based strategies

Antecedent efforts begin well before an innovation is rolled out. They start with actively engaging staff to obtain their buy-in, an essential step before implementing a change process. Rogers (2003) has suggested that the adoption and implementation of new practices is a social process and concludes that innovations will be adopted and implemented to the extent that they

- are compatible with the beliefs, values, and previous experience of individuals within a social system.

- solve a problem for the teacher/staff.

- have a relative advantage over the current practice.

- gain the support of opinion leaders.

Assessment is a key antecedent strategy. An assessment must examine the readiness of the site implementing the practice, evaluate staff development needs, and appraise the available resources required for implementing the practice. Conducting an initial assessment to gauge how closely the new practice will align with the culture of the school or classroom is especially important. Change does not come easily. Studies suggest that interventions that slightly modify existing routines and practices are more likely to succeed, and that large-scale shifts are more likely to be rejected (Detrich, 2014). Too often, new practices are mandated from above without regard for any negative impact on the teachers who must implement them. Measuring the degree of contextual fit allows the school administrator to identify areas of resistance and recognize how to adapt practices to better match the current values and skills of staff. According to Horner, Blitz, and Ross (2014), “Contextual fit is the match between the strategies, procedures, or elements of an intervention and the values, needs, skills, and resources of those who implement and experience the intervention.”

An example of a model that values staff buy-in is Positive Behavioral Interventions and Supports (PBIS), a research-based, schoolwide system approach created to improve school climate and to create safer and more effective schools. PBIS is currently in more than 23,000 schools throughout the United States. Consent is required from 80% of staff before the PBIS framework is introduced into a school. PBIS finds this essential for establishing and maintaining the degree of treatment integrity necessary for the effective implementation of the program’s practices (Horner, Sugai, & Anderson, 2010). Achieving a threshold of support significantly increases the probability of an innovation’s sustainable implementation. Increasing staff motivation does not ensure treatment integrity, but it does increase the chances of success.
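As a rough illustration of the consent threshold described above, the check can be expressed in a few lines. The function name `meets_consent_threshold` and the staff counts are hypothetical; only the 80% figure comes from the text.

```python
def meets_consent_threshold(consenting: int, total_staff: int,
                            threshold_percent: int = 80) -> bool:
    """Return True when the share of consenting staff reaches the threshold.

    Hypothetical helper; integer arithmetic avoids floating-point rounding
    at the exact boundary.
    """
    if total_staff <= 0:
        raise ValueError("total_staff must be positive")
    if not 0 <= consenting <= total_staff:
        raise ValueError("consenting must be between 0 and total_staff")
    return consenting * 100 >= threshold_percent * total_staff


# Made-up example: a school with 50 staff members.
print(meets_consent_threshold(40, 50))  # exactly 80% of staff consent
print(meets_consent_threshold(39, 50))  # 78% of staff consent
```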

Another proven strategy for increasing staff acceptance of change is to provide teachers with choices about which practices they believe are best suited for their setting (Detrich, 1999; Hoier, McConnell, & Pallay, 1987). For a detailed analysis of the strategies and the procedures needed for effective adoption of new practices visit the National Implementation Research Network website and the organization’s research synthesis on the topic (Fixsen et al., 2005).

After staff commitment has been achieved, personnel must be effectively trained in the skills and supporting procedures required for the new practice. Staff development is deemed important, as evidenced by the American education system’s expenditure of $18,000 on average per teacher annually (Jacob & McGovern, 2015). Unfortunately, research suggests that schools receive little return for the substantial time and resources they spend on teacher development (Garet et al., 2008). Research reveals that most staff development in schools consists of staff in-service or professional development workshops, and studies find these sessions to be ineffective (Joyce & Showers, 2002).

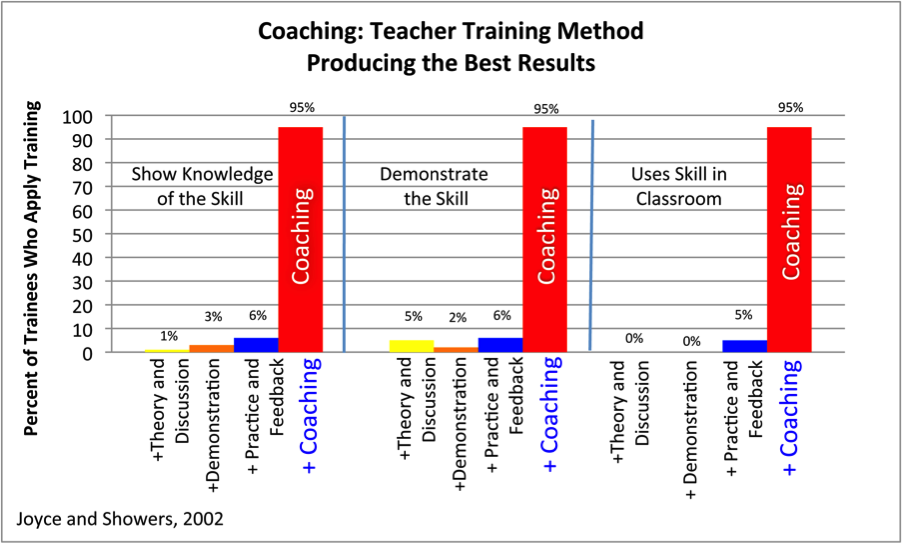

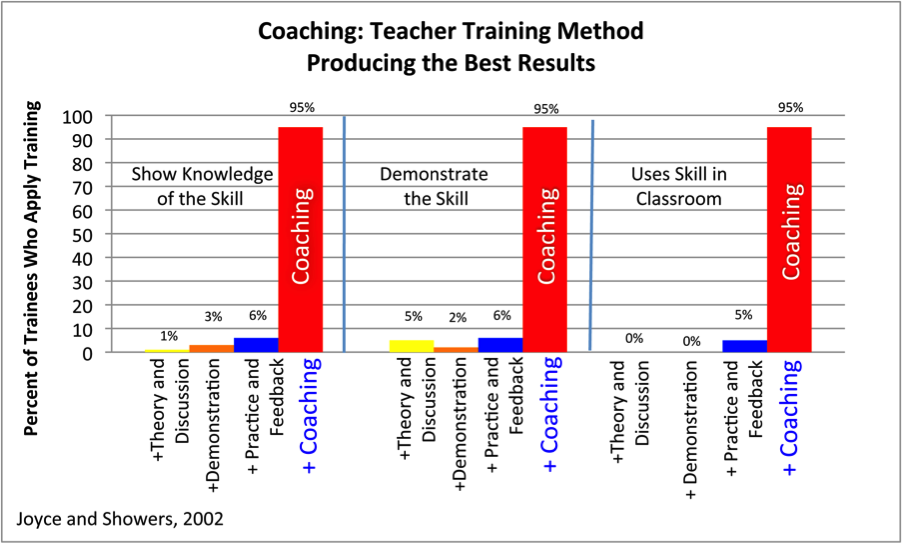

Figure 2: The Influence of Coaching on Whether a New Skill Is Used in the Classroom

Joyce and Showers (2002) found that when teachers receive workshop training

- 0% of the teachers transfer a new practice to the classroom after instruction in the theory.

- 0% of the teachers transfer a new practice to the classroom after instruction in the theory and observing a demonstration of the practice.

- 5% of the teachers transfer a new practice to the classroom after theory, demonstration, and rehearsal.

- 95% of the teachers transfer a new practice to the classroom after theory, demonstration, rehearsal, and coaching.

Additionally, only coached teachers were able to adapt strategies and overcome obstacles that arose during implementation.
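The Joyce and Showers (2002) figures above can be tabulated to show the step-to-step change as each training component is added. This is a minimal sketch: the names `TRANSFER_RATE_PERCENT` and `gain_from_last_component` are hypothetical, the percentages are copied from the list above rather than recomputed from the original study, and treating each difference as the contribution of the newly added component is a simplification.

```python
# Transfer rates reported above, keyed by the cumulative training components.
TRANSFER_RATE_PERCENT = {
    ("theory",): 0,
    ("theory", "demonstration"): 0,
    ("theory", "demonstration", "rehearsal"): 5,
    ("theory", "demonstration", "rehearsal", "coaching"): 95,
}


def gain_from_last_component(rates: dict) -> dict:
    """Map each newly added component to the percentage-point change it accompanies."""
    gains = {}
    previous = 0
    # Order by number of components so each step adds exactly one component.
    for components, rate in sorted(rates.items(), key=lambda item: len(item[0])):
        gains[components[-1]] = rate - previous
        previous = rate
    return gains


for component, gain in gain_from_last_component(TRANSFER_RATE_PERCENT).items():
    print(f"{component}: +{gain} percentage points")
```

Laid out this way, nearly all of the reported transfer appears only once coaching is added to the other three components.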

To reverse the dependence on workshops, training should be organized around coaching and the use of written manuals that clearly and objectively outline the performance required of the teacher (Kauffman, 2012; Knight, 2013). The most effective staff development, resulting in consistent implementation of a practice, involves working with actual students in a classroom as opposed to didactic presentations or workshop simulations (Reinke, Sprick, & Knight, 2009). Evidence suggests that ongoing coaching is necessary before teachers consistently use newly taught skills in the classroom, and it is fundamental if the teachers are to sustain the desired degree of treatment integrity to maximize desired outcomes.

Consequence-based strategies

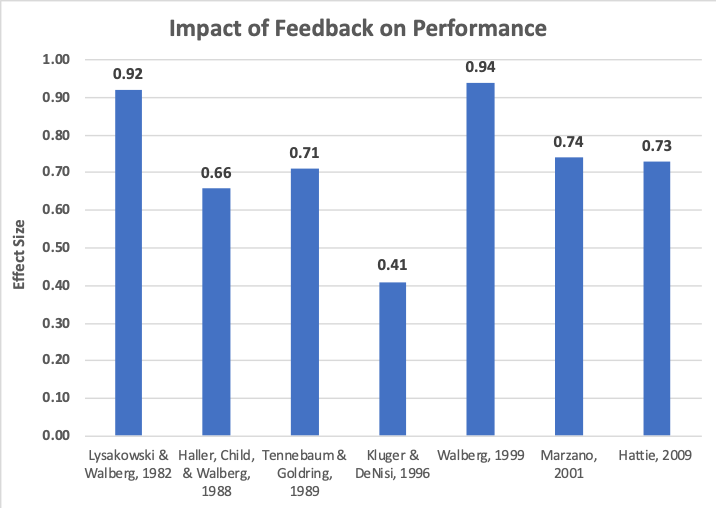

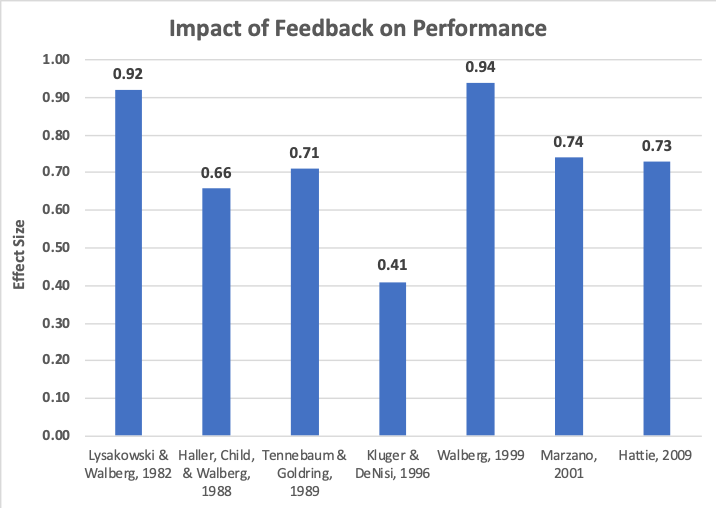

Studies find that treatment integrity declines almost immediately after training. It appears that acquiring staff commitment and providing training are not sufficient to maintain treatment integrity (Duhon, Mesmer, Gregerson, & Witt, 2009; Noell et al., 2000). Avoiding the near certainty of decline requires additional components, namely monitoring implementation and providing feedback to the teacher. Ongoing systematic observations offer the best opportunities for trainers to know what is working and what is not (Wasik & Hindman, 2011). The applicable data suggest that performance feedback is not only important but essential for establishing and maintaining treatment integrity. Various authors have evaluated the impact of performance feedback on teacher behavior (see Figure 3).

Figure 3: Effects of Feedback on Performance

Performance feedback, usually based on direct sampling of performance, is a requisite feature of any effort to achieve or maintain treatment integrity. It has been demonstrated to reduce the degradation of integrity and is useful as a means of reestablishing integrity after it has declined (Duhon et al., 2009; Witt, Noell, LaFleur, & Mortenson, 1997). Research suggests that daily feedback is most powerful, although weekly feedback does improve performance (Detrich, 2014). Follow-up meetings that take place after observations and that include specific data reviewed with the staff are most effective. Individual feedback sessions following observations produced better outcomes than telephone calls, emails, or written handouts (Easton & Erchul, 2011). Feedback provides opportunities to deliver accurate information that is both positive and corrective. Annual or bi-annual feedback used for high-stakes formal evaluation is less effective than constructive feedback focused on skills improvement and delivered throughout the school year; principal feedback, although acceptable, is significantly less preferred by teachers (Hill & Grossman, 2013). Teachers are most receptive to feedback that is nonthreatening and focuses on improving skills and the practices they are being taught. Moreover, comments perceived as direct criticism of the person have consistently been shown to produce poorer results (Kluger & DeNisi, 1996).
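One way to picture how direct sampling of performance can feed a feedback decision is a per-observation integrity score compared against a criterion. The sketch below rests on several assumptions: scoring integrity as the percentage of planned steps observed, the 80% criterion, the made-up observation data, and the names `integrity_percent` and `sessions_needing_feedback` are all hypothetical and are not taken from the cited studies.

```python
from typing import List, Sequence


def integrity_percent(steps_observed: Sequence[bool]) -> float:
    """Percentage of planned intervention steps implemented as designed."""
    if not steps_observed:
        raise ValueError("at least one planned step is required")
    return 100.0 * sum(steps_observed) / len(steps_observed)


def sessions_needing_feedback(sessions: Sequence[Sequence[bool]],
                              criterion: float = 80.0) -> List[int]:
    """Return indices of observed sessions whose integrity falls below the criterion.

    The default criterion is an assumed value for illustration only.
    """
    return [i for i, steps in enumerate(sessions)
            if integrity_percent(steps) < criterion]


# Made-up observation data: each inner list is one session's step checklist.
observations = [
    [True, True, True, True, True],      # all 5 planned steps observed
    [True, True, True, True, False],     # 4 of 5 steps observed
    [True, False, True, False, False],   # 2 of 5 steps observed
]

print([integrity_percent(s) for s in observations])
print(sessions_needing_feedback(observations))
```

A checklist of this kind gives the observer specific data to review with the teacher in a follow-up meeting, and repeated scores make a decline in integrity visible soon after it begins.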

Conclusion

The increasing pressure on schools to improve student achievement has resulted in teachers being bombarded with a myriad of school reforms. As educators look for solutions to stagnant student performance, demand has increased for schools to embrace evidence-based practices. These practices, vetted using rigorous research methods, are intended to increase confidence in a causal relationship between a practice and student outcomes. Practices must be implemented as they were designed if the predicted outcomes are to be achieved. Educators increasingly embrace the perspective that innovations must be implemented with integrity (Detrich, 2014).

Implementation with high levels of treatment integrity does not come easily and does have costs. Sufficient research and practice-based evidence strongly suggest successful implementation of reform is a complex process requiring the investment of resources, time, and money. Key components of effective implementation must address antecedent- and consequence-based strategies shown to be necessary for sustainable implementation. Paramount is the commitment to measure treatment integrity on an ongoing basis. Principals need to effectively arrange contingencies so that teachers accept this notion, and teachers need to adopt and sustain strategies that support implementation of practices with treatment integrity. Failure to implement with integrity decreases the likelihood that new practices will produce meaningful results. Often, past reform efforts have ignored the issue of treatment integrity, resulting in viable reforms being abandoned when they may actually have worked if implemented with integrity.

Citations

Detrich, R. (1999). Increasing treatment fidelity by matching interventions to contextual variables within the educational setting. School Psychology Review, 28(4), 608–620.

Detrich, R. (2014). Treatment integrity: Fundamental to education reform. Journal of Cognitive Education and Psychology, 13(2), 258–271.

Detrich, R. (2015). Treatment integrity: A wicked problem and some solutions. Missouri Association for Behavior Analysis 2015 Conference. http://winginstitute.org/2015-MissouriABA-Presentation-Ronnie-Detrich

Duhon, G. J., Mesmer, E. M., Gregerson, L., & Witt, J. C. (2009). Effects of public feedback during RTI team meetings on teacher implementation integrity and student academic performance. Journal of School Psychology, 47(1), 19–37.

Durlak, J. A., & DuPre, E. P. (2008). Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology, 41(3–4), 327–350.

Easton, J. E., & Erchul, W. P. (2011). An exploration of teacher acceptability of treatment plan implementation: Monitoring and feedback methods. Journal of Educational and Psychological Consultation, 21(1), 56–77.

Fixsen, D. L., Blase, K. A., Duda, M., Naoom, S. F., & Van Dyke, M. (2010). Sustainability of evidence-based programs in education. Journal of Evidence-Based Practices for Schools, 11(1), 30–46.

Fixsen, D. L., Naoom, S. F., Blase, K. A., Friedman, R. M., & Wallace, F. (2005). Implementation research: A synthesis of the literature (FMHI Publication No. 231). Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, and the National Implementation Research Network.

Garet, M. S., Cronen, S., Eaton, M., Kurki, A., Ludwig, M., Jones, W., ... Zhu, P. (2008). The impact of two professional development interventions on early reading instruction and achievement. NCEE 2008-4030. Washington, DC: National Center for Education Evaluation and Regional Assistance.

Hallfors, D., & Godette, D. (2002). Will the “principles of effectiveness” improve prevention practice? Early findings from a diffusion study. Health Education Research, 17(4), 461–470.

Hill, H., & Grossman, P. (2013). Learning from teacher observations: Challenges and opportunities posed by new teacher evaluation systems. Harvard Educational Review, 83(2), 371–384.

Hoier, T. S., McConnell, S., & Pallay, A. G. (1987). Observational assessment for planning and evaluating educational transitions: An initial analysis of template matching. Behavioral Assessment, 9(1), 5–19.

Horner, R., Blitz, C., & Ross, S. (2014). The importance of contextual fit when implementing evidence-based interventions. Washington, DC: U.S. Department of Health and Human Services, Office of the Assistant Secretary for Planning and Evaluation. https://aspe.hhs.gov/system/files/pdf/77066/ib_Contextual.pdf

Horner, R. H., Sugai, G., & Anderson, C. M. (2010). Examining the evidence base for school-wide positive behavior support. Focus on Exceptional Children, 42(8), 1.

Jacob, A., & McGovern, K. (2015). The mirage: Confronting the hard truth about our quest for teacher development. Brooklyn, NY: TNTP. https://tntp.org/assets/documents/TNTP-Mirage_2015.pdf.

Joyce, B. R., & Showers, B. (2002). Student achievement through staff development. Alexandria, VA: Association for Supervision and Curriculum Development (ASCD) Books.

Kauffman, J. M. (2012). Science and the education of teachers. In: Education at the Crossroads: The State of Teacher Preparation (Vol. 2, pp. 47–64). Oakland, CA: The Wing Institute.

Kluger, A. N., & DeNisi, A. (1996). The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychological Bulletin, 119(2), 254–284.

Knight, J. (2013). Focus on teaching: Using video for high-impact instruction (pp. 8–14). Thousand Oaks, CA: Corwin.

McIntyre, L. L., Gresham, F. M., DiGennaro, F. D., & Reed, D. D. (2007). Treatment integrity of school‐based interventions with children in the Journal of Applied Behavior Analysis 1991–2005. Journal of Applied Behavior Analysis, 40(4), 659–672.

Noell, G. H., Witt, J. C., LaFleur, L. H., Mortenson, B. P., Ranier, D. D., & LeVelle, J. (2000). Increasing intervention implementation in general education following consultation: A comparison of two follow-up strategies. Journal of Applied Behavior Analysis, 33(3), 271–284.

Reinke, W. M., Sprick, R., & Knight, J. (2009). Coaching classroom management. In: J. Knight (Ed.), Coaching: Approaches and perspectives (pp. 91–112). Thousand Oaks, CA: Corwin Press.

Rogers, E. M. (2003). Diffusion of innovations (5th ed.). New York, NY: Free Press.

Wasik, B. A., & Hindman, A. H. (2011). Improving vocabulary and pre-literacy skills of at-risk preschoolers through teacher professional development. Journal of Educational Psychology, 103(2), 455.

Witt, J. C., Noell, G. H., LaFleur, L. H., & Mortenson, B. P. (1997). Teacher use of interventions in general education settings: Measurement and analysis of the independent variable. Journal of Applied Behavior Analysis, 30(4), 693–696.
