How does performance feedback affect the way teachers carry out interventions?

Why is this question important? In an era in which educators are mandated to use scientifically supported interventions to solve academic and social behavior problems, it is important that interventions be implemented with enough quality to ensure benefit (treatment integrity). The effectiveness of an intervention depends on implementation as prescribed in the research. There is considerable evidence to suggest that, on its own, training on how to put a specific procedure into practice does not ensure high-quality implementation (Mortenson & Witt, 1998; Noell, Witt, Gilbertson, Ranier, & Freeland, 1997). Thus, it is necessary to develop approaches that will ensure proper implementation. One possible approach is performance feedback, which has been well researched in business and industry but less so in education.


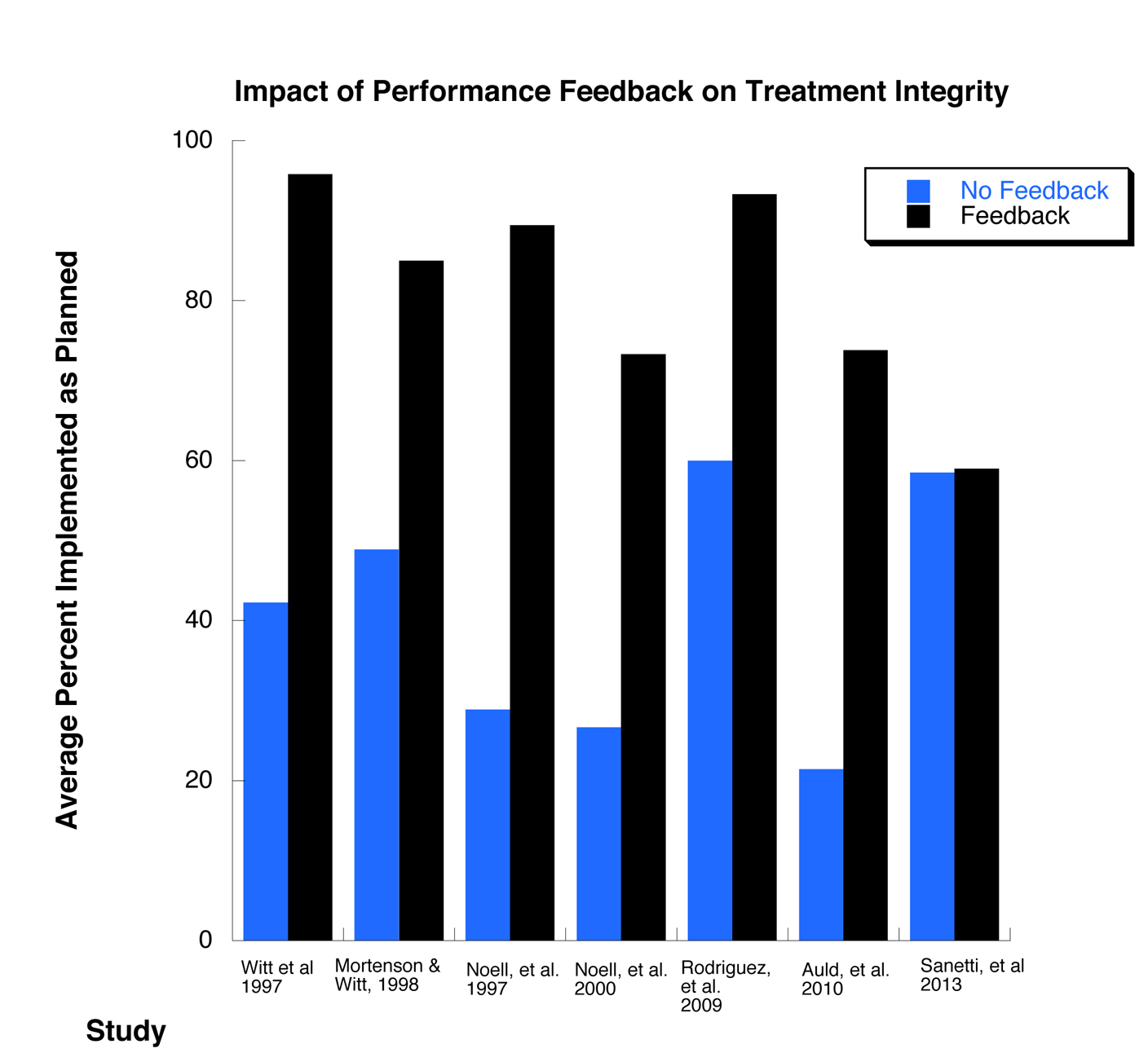

Results: In every study but one (see graph), performance feedback resulted in significant increases in the quality of implementation. This was the case even though interventions ranged from academic instructional programs to behavior management; experience of educators ranged from pre-service teachers to teachers with many years of experience; and settings were across the K–12 continuum.

The one exception to the generally positive outcomes was the Sanetti, Fallon, and Collier-Meek (2013) study. In all the other studies, external consultants (the researchers directing the studies) delivered the performance feedback, whereas in the Sanetti study, internal consultants (existing school staff, such as school psychologists) provided the feedback. Sanetti et al. noted that, as the study progressed, the internal consultants often veered from the protocols and did not give proper feedback.

Implications: It is clear that performance feedback can increase the quality of implementation in educational settings, but the Sanetti study raises important questions about its scalability. If internal consultants cannot effectively deliver performance feedback on a regular basis, then it is not a viable option for schools. The Sanetti data highlight the importance of a systemic approach to improving the quality of implementation. Without a comprehensive systemwide approach, it is unlikely that the promised benefits will be achieved. These data suggest that considerable research is needed to identify effective and efficient strategies for ensuring high-quality implementation.

Study Description: The studies for this analysis were selected if they met the following criteria:

- The study occurred in public schools.

- Single participant design was the methodology used for analysis of effects.

- Treatment integrity rather than student performance was the primary focus of the study.

- Performance feedback was the primary intervention evaluated to determine the effects on treatment integrity, and it was not combined with other strategies.

- A post-training baseline evaluated the effects of training prior to introducing performance feedback, making it possible to sort out the effects of training from the effects of performance feedback.

For the purposes of analysis, the last three data points (in this case, the last three days' performance) from the post-training baseline and from the performance feedback phases for each participant in each study were recorded. The data points in each phase of a study were then summed across participants and an average was derived for the baseline and intervention phases.
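The averaging procedure described above can be sketched in a few lines of Python. This is only an illustration of the steps as stated, not the analysts' actual method or data: the function name `phase_averages`, the dictionary keys `baseline` and `feedback`, and the integrity percentages are all hypothetical.

```python
def phase_averages(participants):
    """Return (baseline_mean, feedback_mean) for one study.

    For each participant, keep only the last three data points of the
    post-training baseline phase and of the performance feedback phase,
    pool those points across participants, and average each pool.
    """
    baseline_points = []
    feedback_points = []
    for participant in participants:
        # Slicing with [-3:] keeps the final three observations of a phase.
        baseline_points.extend(participant["baseline"][-3:])
        feedback_points.extend(participant["feedback"][-3:])
    baseline_mean = sum(baseline_points) / len(baseline_points)
    feedback_mean = sum(feedback_points) / len(feedback_points)
    return baseline_mean, feedback_mean


# Hypothetical treatment integrity scores (percent of steps implemented
# per day) for two teachers in one study; these are not data from any
# of the cited studies.
example_study = [
    {"baseline": [80, 40, 30, 20], "feedback": [60, 90, 100, 90]},
    {"baseline": [50, 40, 30], "feedback": [80, 90, 90]},
]

baseline_mean, feedback_mean = phase_averages(example_study)
print(baseline_mean, feedback_mean)  # 35.0 90.0
```

Using only the last three points of each phase compares performance at the end of the baseline with performance at the end of the feedback phase, rather than averaging over an entire phase.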

Definitions: Performance feedback: Giving feedback to a person about compliance with a specific protocol such as a checklist or job aid. Usually, the feedback is based on data and, frequently but not necessarily, direct observation of performance.

Single participant design: An experimental approach that analyzes the performance of a few individuals over time. It relies on repeated measures of the performance of interest and systematically changes one variable at a time.

Treatment integrity: Implementing an intervention as prescribed.

Citations:

Auld, R. G., Belfiore, P. J., & Scheeler, M. C. (2010). Increasing pre-service teachers' use of differential reinforcement: Effects of performance feedback on consequences for student behavior. Journal of Behavioral Education, 19(2), 169–183.

Mortenson, B. P., & Witt, J. C. (1998). The use of weekly performance feedback to increase teacher implementation of a prereferral academic intervention. School Psychology Review, 27(4), 613–627.

Noell, G. H., Witt, J. C., Gilbertson, D. N., Ranier, D. D., & Freeland, J. T. (1997). Increasing teacher intervention implementation in general education settings through consultation and performance feedback. School Psychology Quarterly, 12(1), 77–88.

Noell, G. H., Witt, J. C., LaFleur, L. H., Mortenson, B. P., Ranier, D. D., & LeVelle, J. (2000). Increasing intervention implementation in general education following consultation: A comparison of two follow-up strategies. Journal of Applied Behavior Analysis, 33(3), 271–284.

Rodriguez, B. J., Loman, S. L., & Horner, R. H. (2009). A preliminary analysis of the effects of coaching feedback on teacher implementation fidelity of First Step to Success. Behavior Analysis in Practice, 2(2), 11–21.

Sanetti, L. M. H., Fallon, L. M., & Collier-Meek, M. A. (2013). Increasing teacher treatment integrity through performance feedback provided by school personnel. Psychology in the Schools, 50(2), 134–150.

Witt, J. C., Noell, G. H., LaFleur, L. H., & Mortenson, B. P. (1997). Teacher use of interventions in general education settings: Measurement and analysis of the independent variable. Journal of Applied Behavior Analysis, 30(4), 693–696.