How Well Are Interventions Implemented in Educational Settings?

Why is this question important? It is well established that empirically supported interventions are most likely to benefit students when they are implemented with a high level of treatment integrity (Detrich, 2014). It is equally well established that the effects of research-based interventions are smaller when the interventions are implemented in educational settings by staff assigned to the program (Schoenwald & Hoagwood, 2001). What is not well established is how well educators generally implement empirically supported interventions. The question is important because if low treatment integrity is one of the primary reasons for diminished benefit from empirically supported interventions, then steps must be taken to ensure that those interventions are implemented well. In short, an effective program will be less effective if it is not implemented well. The studies reviewed here do not provide a definitive answer to the question of how well interventions are implemented, but they do suggest an answer.

See further discussion below.

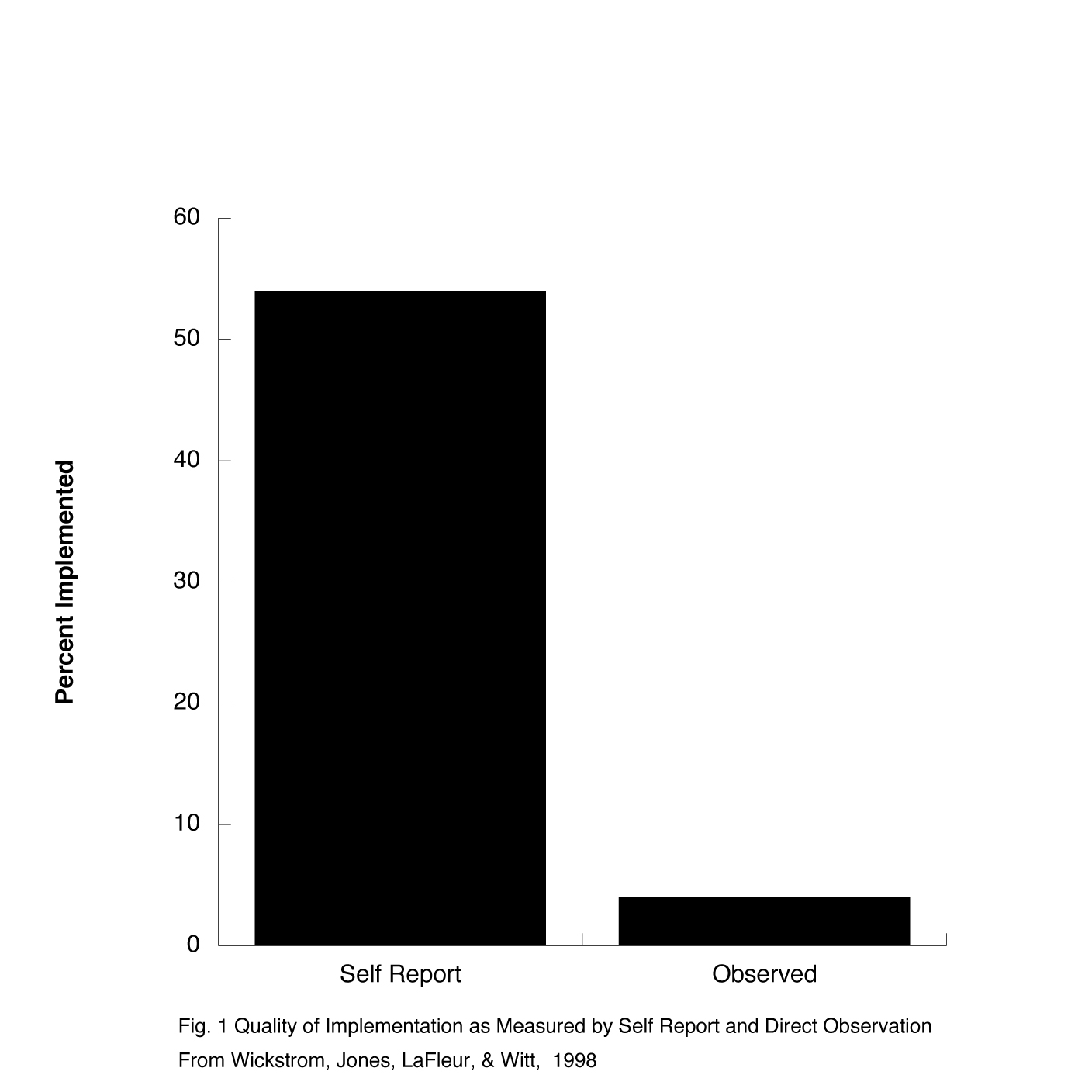

Results: Figure 1 shows the difference, reported by Wickstrom et al. (1998), between the average level of implementation reported by teachers (54%) and the average level based on independent, direct observation of the teachers implementing the intervention (4%). Two points are striking about these data. First, the observed level of implementation is only 4%, even though the teachers were trained to 100% accuracy. This is consistent with other data suggesting that training alone is insufficient to ensure effective implementation and that ongoing performance feedback is necessary to maintain adequate levels of intervention implementation (Mortenson & Witt, 1998; Noell, Witt, Gilbertson, Ranier, & Freeland, 1997).

A second important feature of these data is that self-reporting is an inadequate method for assessing the quality of implementation. Although very time-efficient, it does not accurately reflect how well an intervention is actually implemented. Independent, direct observation is likely to produce the most accurate measure of implementation, but it is largely impractical in public schools because it requires resources that are not available. The challenge for educators and implementation scientists is to find measures of implementation that are both time-efficient and accurate.
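The size of the gap between self-report and direct observation can be made concrete with a little arithmetic. The following is a minimal sketch in Python that uses only the two percentages cited above from Wickstrom et al. (1998); the variable names are illustrative assumptions and are not taken from the study.

```python
# Averages cited in the text from Wickstrom et al. (1998).
self_reported_pct = 54.0  # implementation level reported by teachers
observed_pct = 4.0        # level found by independent, direct observation

# Absolute gap between the two measures, in percentage points.
gap_points = self_reported_pct - observed_pct

# How many times larger the self-reported level is than the observed level.
overstatement_ratio = self_reported_pct / observed_pct

print(f"Gap: {gap_points:.0f} percentage points")
print(f"Self-report is {overstatement_ratio:.1f} times the observed level")
```

On these averages, self-report overstates observed implementation by 50 percentage points, or by a factor of 13.5. These are summary figures only; they say nothing about how much individual teachers varied.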

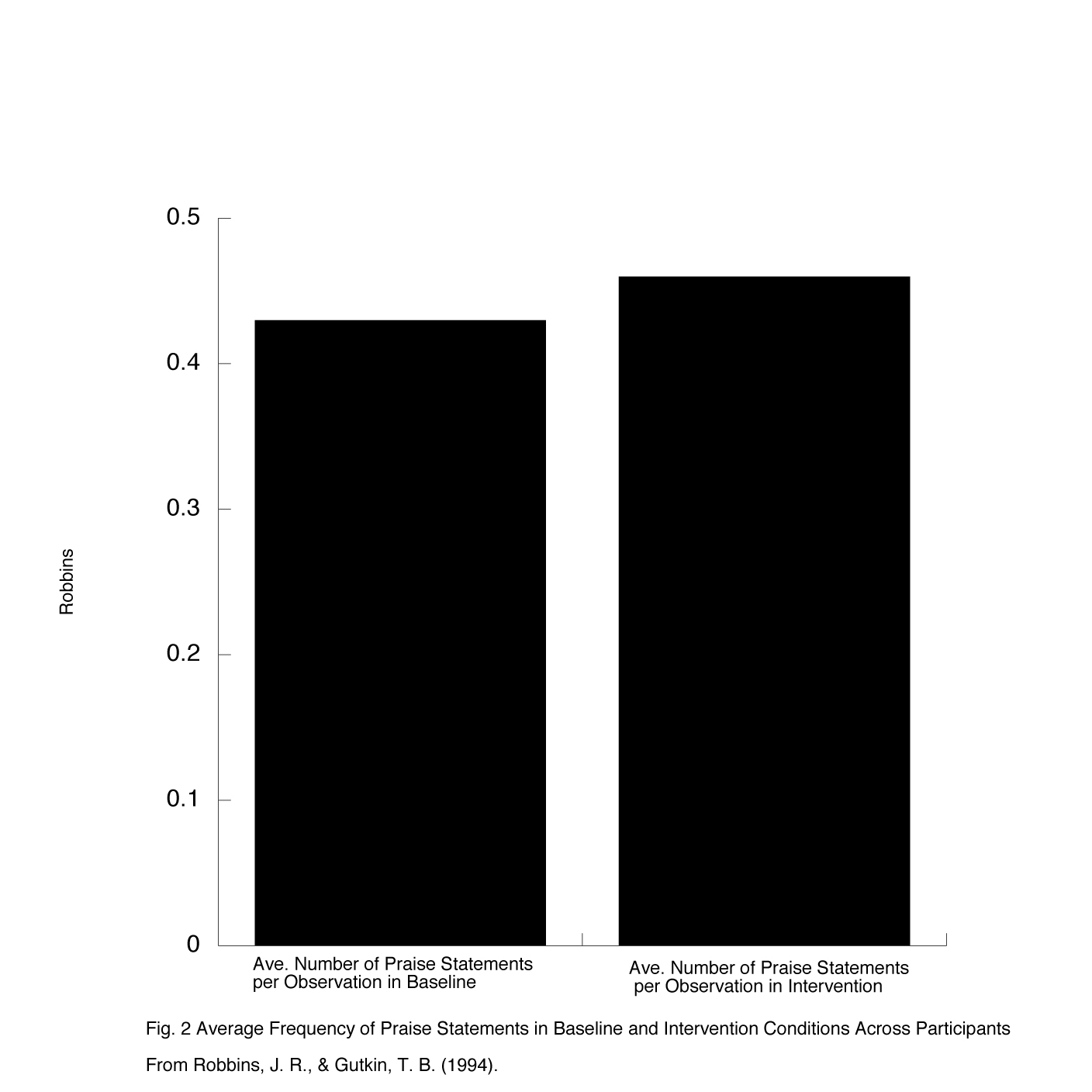

Figure 2 shows the data from the Robbins and Gutkin (1994) study. The data reflect the average frequency of praise statements during 30-minute observations across baseline and intervention conditions. The identified student was praised 0.43 times per session during the baseline phase and 0.46 times per session during the intervention phase. One interesting aspect of this study is that all teachers reported that they had increased the frequency of praising during the intervention phase. This finding is consistent with the data from the Wickstrom et al. (1998) study, which showed that self-reporting was not a reliable method for assessing quality of implementation (Figure 1). To put the obtained data in perspective, during the baseline phase students were praised roughly once every two to three observation sessions. In the intervention phase, they were slightly more likely to be praised, but still only about once every two to three sessions. It is very doubtful that changes of such a small magnitude would have any discernible impact on student behavior.
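To see how small the change in praise was, the two session averages can be converted into an absolute change, a relative change, and the number of sessions that pass per praise statement. This is a minimal sketch in Python using only the two averages cited above from Robbins and Gutkin (1994); the variable names are illustrative assumptions.

```python
# Average praise statements per 30-minute session, as cited in the text
# from Robbins and Gutkin (1994).
baseline_rate = 0.43
intervention_rate = 0.46

# Change in praise statements per session.
absolute_change = intervention_rate - baseline_rate

# Change relative to the baseline rate, as a percentage.
relative_change_pct = absolute_change / baseline_rate * 100

# Average number of sessions that pass per praise statement.
sessions_per_praise_baseline = 1 / baseline_rate
sessions_per_praise_intervention = 1 / intervention_rate

print(f"Absolute change: {absolute_change:.2f} praise statements per session")
print(f"Relative change: {relative_change_pct:.1f}%")
print(f"Sessions per praise, baseline: {sessions_per_praise_baseline:.1f}")
print(f"Sessions per praise, intervention: {sessions_per_praise_intervention:.1f}")
```

On these averages, praise rose by 0.03 statements per session, a relative increase of about 7%, and a student still waited more than two sessions on average for a single praise statement in both phases.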

Implications: Taken together, these studies suggest that if empirically supported interventions are to be effective, alternative approaches that give greater weight to the quality of implementation should be developed. Training and instructions alone are insufficient. Poorly implemented programs are not only likely to be ineffective, but they are also adopted at significant cost (purchasing the intervention, training individuals to implement it, and so on). In effect, when an intervention is never actually implemented as designed, there is no benefit to offset those costs. This situation is indefensible in a time of scarce financial resources.

Failure to obtain benefit from empirically supported interventions is likely to lead to cynicism about whether any intervention can be effective. The ultimate cost of failure to implement interventions adequately is that students do not benefit, resulting in lost educational opportunities. Clearly, there are resource costs associated with ensuring that interventions are well implemented, but the prospect of actual benefit offsets those costs. Currently, education is experiencing high cost with limited offsetting benefit. Perhaps it is time to invest in quality implementation.

Study Description: The data for this review come from two separate studies. The first study, by Wickstrom, Jones, LaFleur, and Witt (1998), asked 29 teacher participants either to select a research-based intervention to implement in their classrooms or to have one prescribed by a consultant. The teachers were instructed on how to implement the selected intervention and then self-reported on the accuracy of their implementation. In addition, independent observers measured the accuracy of implementation by the teachers. The second study, by Robbins and Gutkin (1994), measured the frequency of praise statements directed to a student who had been identified as having difficulty attending in class. Praise was measured during 30-minute observations both prior to intervention (to determine a baseline) and during intervention. During the baseline phase, teachers were observed to determine the frequency of praising. In the intervention phase, teachers were explicitly instructed to increase the frequency of praising.

Citation:

Detrich, R. (2014). Treatment integrity: Fundamental to education reform. Journal of Cognitive Education & Psychology, 13(2), 258–271.

Hawken, L. S., & Horner, R. H. (2003). Evaluation of a targeted intervention within a schoolwide system of behavior support. Journal of Behavioral Education, 12(3), 225–240.

Mortenson, B. P., & Witt, J. C. (1998). The use of weekly performance feedback to increase teacher implementation of a prereferral academic intervention. School Psychology Review, 27, 613–627.

Noell, G. H., Witt, J. C., Gilbertson, D. N., Ranier, D. D., & Freeland, J. T. (1997). Increasing teacher intervention implementation in general education settings through consultation and performance feedback. School Psychology Quarterly, 12(1), 77–88.

Robbins, J. R., & Gutkin, T. B. (1994). Consultee and client remedial and preventive outcomes following consultation: Some mixed empirical results and directions for future researchers. Journal of Educational and Psychological Consultation, 5(2), 149–167.

Schoenwald, S. K., & Hoagwood, K. (2001). Effectiveness, transportability, and dissemination of interventions: What matters when? Psychiatric Services, 52(9), 1190–1197.

Wickstrom, K. F., Jones, K. M., LaFleur, L. H., & Witt, J. C. (1998). An analysis of treatment integrity in school-based behavioral consultation. School Psychology Quarterly, 13(2), 141–154.